
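Prometheus Operator installs a set of ServiceMonitors by default. None of them covers an important component: etcd. This is probably because etcd can be deployed in many ways, inside or outside the cluster, so exposing its metrics is left to the user. That makes it a good example for learning how to use the ServiceMonitor object to onboard your own custom endpoints.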

My cluster runs Kubernetes 1.18. It is a single-master cluster installed with kubeadm, and etcd is deployed as a static Pod.

    [root@k8s-master ~]# kubectl get pod -n kube-system etcd-k8s-master -o wide
    NAME              READY   STATUS    RESTARTS   AGE   IP               NODE         NOMINATED NODE   READINESS GATES
    etcd-k8s-master   1/1     Running   7          19d   192.168.44.151   k8s-master   <none>           <none>
    [root@k8s-master ~]# cat /etc/kubernetes/manifests/etcd.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        kubeadm.kubernetes.io/etcd.advertise-client-urls: https://192.168.44.151:2379
      creationTimestamp: null
      labels:
        component: etcd
        tier: control-plane
      name: etcd
      namespace: kube-system
    spec:
      containers:
      - command:
        - etcd
        - --advertise-client-urls=https://192.168.44.151:2379
        - --cert-file=/etc/kubernetes/pki/etcd/server.crt
        - --client-cert-auth=true
        - --data-dir=/var/lib/etcd
        - --initial-advertise-peer-urls=https://192.168.44.151:2380
        - --initial-cluster=k8s-master=https://192.168.44.151:2380
        - --key-file=/etc/kubernetes/pki/etcd/server.key
        - --listen-client-urls=https://127.0.0.1:2379,https://192.168.44.151:2379
        - --listen-metrics-urls=http://127.0.0.1:2381
        - --listen-peer-urls=https://192.168.44.151:2380
        - --name=k8s-master
        - --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
        - --peer-client-cert-auth=true
        - --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
        - --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        - --snapshot-count=10000
        - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        image: registry.aliyuncs.com/k8sxio/etcd:3.4.3-0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /health
            port: 2381
            scheme: HTTP
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: etcd
        resources: {}
        volumeMounts:
        - mountPath: /var/lib/etcd
          name: etcd-data
        - mountPath: /etc/kubernetes/pki/etcd
          name: etcd-certs
      hostNetwork: true
      priorityClassName: system-cluster-critical
      volumes:
      - hostPath:
          path: /etc/kubernetes/pki/etcd
          type: DirectoryOrCreate
        name: etcd-certs
      - hostPath:
          path: /var/lib/etcd
          type: DirectoryOrCreate
        name: etcd-data
    status: {}

etcd serves its metrics on client port 2379, over HTTPS at /metrics. We can check that this works as follows:

    [root@k8s-master ~]# curl -k -XGET https://192.168.44.151:2379/metrics --cert /etc/kubernetes/pki/etcd/ca.crt --key /etc/kubernetes/pki/etcd/ca.key
    # HELP etcd_cluster_version Which version is running. 1 for 'cluster_version' label with current cluster version
    # TYPE etcd_cluster_version gauge
    etcd_cluster_version{cluster_version="3.4"} 1
    # HELP etcd_debugging_disk_backend_commit_rebalance_duration_seconds The latency distributions of commit.rebalance called by bboltdb backend.
    # TYPE etcd_debugging_disk_backend_commit_rebalance_duration_seconds histogram
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.001"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.002"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.004"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.008"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.016"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.032"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.064"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.128"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.256"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.512"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="1.024"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="2.048"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="4.096"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="8.192"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="+Inf"} 3604
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_sum 0.003950182
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_count 3604
    # HELP etcd_debugging_disk_backend_commit_spill_duration_seconds The latency distributions of commit.spill called by bboltdb backend.
    # TYPE etcd_debugging_disk_backend_commit_spill_duration_seconds histogram
    etcd_debugging_disk_backend_commit_spill_duration_seconds_bucket{le="0.001"} 3601
    etcd_debugging_disk_backend_commit_spill_duration_seconds_bucket{le="0.002"} 3602
    etcd_debugging_disk_backend_commit_spill_duration_seconds_bucket{le="0.004"} 3603
    etcd_debugging_disk_backend_commit_spill_duration_seconds_bucket{le="0.008"} 3603

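    ####### (rest of the output omitted)

This confirms that the cluster's etcd metrics service is running and returning data. Now we can start onboarding the endpoint.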
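
The response above uses the Prometheus text exposition format. It consists of # HELP and # TYPE comment lines followed by samples of the form name{labels} value. A histogram such as etcd_debugging_disk_backend_commit_rebalance_duration_seconds is exposed as cumulative _bucket counters, where each le bucket counts every observation up to that bound, plus _sum and _count.

To make this concrete, here is a minimal Python sketch that does three things with a few of the lines shown above:

- parses the samples;
- computes the mean latency as _sum / _count;
- estimates the 99th percentile by linear interpolation inside the bucket that contains it, in the spirit of PromQL's histogram_quantile().

It is not code from etcd or Prometheus. The helper names parse_samples and histogram_quantile are hypothetical, and the parser ignores timestamps and escaped quotes in label values.

```python
import math
import re

# One sample line: metric name, optional {label="value",...}, then the value.
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_samples(text):
    """Return (name, labels, value) tuples; # HELP / # TYPE lines are skipped."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = SAMPLE_RE.match(line)
        if match is None:
            continue
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))  # float("+Inf") is inf
    return samples


def histogram_quantile(q, buckets):
    """Estimate quantile q (0 < q < 1) from cumulative (upper_bound, count)
    pairs by linear interpolation inside the bucket that contains the rank."""
    buckets = sorted(buckets)
    if len(buckets) < 2 or buckets[-1][0] != math.inf or buckets[-1][1] == 0:
        return math.nan
    rank = q * buckets[-1][1]
    for i, (upper, count) in enumerate(buckets):
        if count >= rank:
            break
    if upper == math.inf:
        # The rank falls into the +Inf bucket: report the highest finite bound.
        return buckets[-2][0]
    lower, prev_count = (0.0, 0.0) if i == 0 else buckets[i - 1]
    in_bucket = count - prev_count
    if in_bucket == 0:
        return upper
    return lower + (upper - lower) * (rank - prev_count) / in_bucket


# A few lines copied from the curl output above.
SAMPLE = """
# TYPE etcd_cluster_version gauge
etcd_cluster_version{cluster_version="3.4"} 1
# TYPE etcd_debugging_disk_backend_commit_rebalance_duration_seconds histogram
etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.001"} 3604
etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.002"} 3604
etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="8.192"} 3604
etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="+Inf"} 3604
etcd_debugging_disk_backend_commit_rebalance_duration_seconds_sum 0.003950182
etcd_debugging_disk_backend_commit_rebalance_duration_seconds_count 3604
"""

if __name__ == "__main__":
    samples = parse_samples(SAMPLE)
    values = {name: value for name, labels, value in samples if "le" not in labels}
    buckets = [(float(labels["le"]), value)
               for name, labels, value in samples if name.endswith("_bucket")]
    prefix = "etcd_debugging_disk_backend_commit_rebalance_duration_seconds"
    mean = values[prefix + "_sum"] / values[prefix + "_count"]
    print("mean commit.rebalance latency (s):", mean)  # about 1.1e-06
    print("p99 estimate (s):", histogram_quantile(0.99, buckets))  # about 0.00099
```

Every observation here landed in the first bucket (le="0.001"), so the p99 value is only an interpolation between 0 and 1 ms. A bucketed histogram cannot be more precise than its bucket boundaries.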

Create an Endpoints object for etcd

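The Endpoints object here is not a Prometheus scrape endpoint. It is a Kubernetes resource: the Endpoints object associated with a Service. Its subsets field holds an array of addresses, together with ports. The addresses can point inside or outside the cluster. Here is an existing Endpoints object from the cluster as an example: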

    [root@k8s-master ~]# kubectl get ep -n kube-system
    NAME                      ENDPOINTS                                                                  AGE
    kube-controller-manager   <none>                                                                     19d
    kube-dns                  10.100.235.221:53,10.100.235.222:53,10.100.235.221:9153 + 3 more...        19d
    kube-scheduler            <none>                                                                     19d
    kubelet                   192.168.44.151:4194,192.168.44.159:4194,192.168.44.151:10255 + 3 more...   15d
    nfs-client                <none>                                                                     18d
    [root@k8s-master ~]# kubectl describe ep kube-dns -n kube-system
    Name:         kube-dns
    Namespace:    kube-system
    Labels:       k8s-app=kube-dns
                  kubernetes.io/cluster-service=true
                  kubernetes.io/name=KubeDNS
    Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2022-08-14T08:52:10Z
    Subsets:
      Addresses:          10.100.235.221,10.100.235.222
      NotReadyAddresses:  <none>
      Ports:
        Name     Port  Protocol
        ----     ----  --------
        dns-tcp  53    TCP
        metrics  9153  TCP
        dns      53    UDP
    Events:  <none>
    [root@k8s-master ~]# kubectl get ep -n kube-system kube-dns -o yaml
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: KubeDNS
      name: kube-dns
      namespace: kube-system
    subsets:
    - addresses:
      - ip: 10.100.235.221
        nodeName: k8s-master
        targetRef:
          kind: Pod
          name: coredns-66db54ff7f-jqvcg
          namespace: kube-system
          resourceVersion: "195931"
          uid: e6473edf-dead-47c7-a480-fce50e3fe10d
      - ip: 10.100.235.222
        nodeName: k8s-master
        targetRef:
          kind: Pod
          name: coredns-66db54ff7f-6nvpk
          namespace: kube-system
          resourceVersion: "195923"
          uid: 1fef7f34-f8e8-4a19-8bdc-a2fa91f95335
      ports:
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP
      - name: dns
        port: 53

        protocol: UDP

Following the kube-dns example, we write an Endpoints object for etcd and create it:

    [root@k8s-master etcd]# cat >etcd-ep.yaml<<-'EOF'
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: etcd1
      name: etcd
      namespace: kube-system
    subsets:
    - addresses:
      - ip: 192.168.44.151   # replace with your own etcd IP
        nodeName: k8s-master
      ports:
      - name: etcd
        port: 2379
        protocol: TCP
    EOF
    [root@k8s-master etcd]# kubectl apply -f etcd-ep.yaml
    endpoints/etcd created

Create a Service for the etcd Endpoints object

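A ServiceMonitor discovers its targets through Services. We have already created the Endpoints object, so now we need a Service linked to it. This Service has no selector, so Kubernetes does not manage its Endpoints automatically. Instead, the Service is paired with the manually created Endpoints object that has the same name (etcd) in the same namespace (kube-system).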

    [root@k8s-master etcd]# cat >etcd-svc.yaml<<-'EOF'
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: etcd1
      name: etcd
      namespace: kube-system
    spec:
      ports:
      - name: etcd
        port: 2379
        protocol: TCP
        targetPort: 2379
      sessionAffinity: None
      type: ClusterIP
    EOF
    [root@k8s-master etcd]# kubectl apply -f etcd-svc.yaml
    service/etcd created
    [root@k8s-master etcd]# kubectl get svc -n kube-system etcd
    NAME   TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
    etcd   ClusterIP   10.96.127.44   <none>        2379/TCP   65s
    [root@k8s-master etcd]# kubectl describe svc -n kube-system etcd
    Name:              etcd
    Namespace:         kube-system
    Labels:            k8s-app=etcd1
    Annotations:       Selector:  <none>
    Type:              ClusterIP
    IP:                10.96.127.44
    Port:              etcd  2379/TCP
    TargetPort:        2379/TCP
    Endpoints:         192.168.44.151:2379
    Session Affinity:  None
    Events:            <none>
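
The Endpoints: 192.168.44.151:2379 line above shows that the pairing worked. Below is a minimal Python model of that lookup, written under simplifying assumptions; it is not Kubernetes source code. A selector-less Service is matched to the Endpoints object with the same namespace and name. Only Endpoints ports whose name matches one of the Service's port names are kept. resolve_selectorless_service is a hypothetical helper, and the dicts carry only the fields the model uses from etcd-svc.yaml and etcd-ep.yaml.

```python
# Minimal dicts mirroring etcd-svc.yaml and etcd-ep.yaml (only the fields used here).
SERVICE = {
    "metadata": {"name": "etcd", "namespace": "kube-system", "labels": {"k8s-app": "etcd1"}},
    "spec": {"ports": [{"name": "etcd", "port": 2379, "protocol": "TCP", "targetPort": 2379}]},
}
ENDPOINTS = {
    "metadata": {"name": "etcd", "namespace": "kube-system", "labels": {"k8s-app": "etcd1"}},
    "subsets": [{
        "addresses": [{"ip": "192.168.44.151", "nodeName": "k8s-master"}],
        "ports": [{"name": "etcd", "port": 2379, "protocol": "TCP"}],
    }],
}


def resolve_selectorless_service(service, endpoints_list):
    """Simplified model: return "ip:port" strings for a Service without a selector.

    The Service is paired with the Endpoints object that has the same namespace
    and name; only Endpoints ports whose name matches a Service port name count.
    """
    meta = service["metadata"]
    wanted_ports = {port.get("name") for port in service["spec"]["ports"]}
    for ep in endpoints_list:
        ep_meta = ep["metadata"]
        if (ep_meta["namespace"], ep_meta["name"]) != (meta["namespace"], meta["name"]):
            continue
        return [
            f"{address['ip']}:{port['port']}"
            for subset in ep.get("subsets", [])
            for port in subset.get("ports", [])
            if port.get("name") in wanted_ports
            for address in subset.get("addresses", [])
        ]
    return []  # no Endpoints object with a matching name: the Service has no backends


if __name__ == "__main__":
    print(resolve_selectorless_service(SERVICE, [ENDPOINTS]))  # ['192.168.44.151:2379']
```

The model also shows the common failure mode. If the Endpoints object is named differently from the Service, the Service resolves to nothing, and kubectl describe would show no endpoints.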

The etcd Service is now in place. Let's check that etcd's metrics can be fetched through it:

    [root@k8s-master etcd]# curl -k -XGET https://10.96.127.44:2379/metrics --cert /etc/kubernetes/pki/etcd/ca.crt --key /etc/kubernetes/pki/etcd/ca.key
    # HELP etcd_cluster_version Which version is running. 1 for 'cluster_version' label with current cluster version
    # TYPE etcd_cluster_version gauge
    etcd_cluster_version{cluster_version="3.4"} 1
    # HELP etcd_debugging_disk_backend_commit_rebalance_duration_seconds The latency distributions of commit.rebalance called by bboltdb backend.
    # TYPE etcd_debugging_disk_backend_commit_rebalance_duration_seconds histogram
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.001"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.002"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.004"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.008"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.016"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.032"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.064"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.128"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.256"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="0.512"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="1.024"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="2.048"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="4.096"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="8.192"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_bucket{le="+Inf"} 7800
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_sum 0.008404902
    etcd_debugging_disk_backend_commit_rebalance_duration_seconds_count 7800

    ####### (rest of the output omitted)

Create a ServiceMonitor for etcd

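etcd serves its metrics over HTTPS, so Prometheus must also scrape it over HTTPS. The cluster certificates are self-generated, so Prometheus needs two things when it scrapes:

- the cluster's etcd CA certificate, to verify etcd;
- a client certificate and key, to present to etcd.

In a ServiceMonitor, this is configured with the tlsConfig field: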

    tlsConfig:
      caFile: /etc/prometheus/secrets/etcd-certs/ca.crt
      certFile: /etc/prometheus/secrets/etcd-certs/server.crt
      keyFile: /etc/prometheus/secrets/etcd-certs/server.key

With this approach, the certificates must already exist inside the Prometheus container. Ugh >﹏<, so some extra work is needed.

Mount the certificates into Prometheus as a Secret

    # If you are not sure where your certificates are, check the startup flags in the etcd Pod manifest; the kube-apiserver flags below also show the etcd CA and client certificate paths
    [root@k8s-master ~]# kubectl get pods -n kube-system kube-apiserver-k8s-master -o yaml | grep file
        kubernetes.io/config.source: file
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
        - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
        - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
        - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
        - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --service-account-key-file=/etc/kubernetes/pki/sa.pub
        - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
        - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
        - --oidc-ca-file=/etc/kubernetes/pki/ca.crt
    # List the etcd certificates
    [root@k8s-master ~]# ll /etc/kubernetes/pki/etcd/
    total 32
    -rw-r--r-- 1 root root 1017 Jul 20 10:11 ca.crt
    -rw------- 1 root root 1675 Jul 20 10:11 ca.key
    -rw-r--r-- 1 root root 1094 Jul 20 10:11 healthcheck-client.crt
    -rw------- 1 root root 1679 Jul 20 10:11 healthcheck-client.key
    -rw-r--r-- 1 root root 1135 Jul 20 10:11 peer.crt
    -rw------- 1 root root 1679 Jul 20 10:11 peer.key
    -rw-r--r-- 1 root root 1135 Jul 20 10:11 server.crt
    -rw------- 1 root root 1679 Jul 20 10:11 server.key
    # Create the Secret in the monitoring namespace, where Prometheus runs
    [root@k8s-master ~]# kubectl -n monitoring create secret generic etcd-certs --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key --from-file=/etc/kubernetes/pki/etcd/ca.crt

Next, modify the Prometheus resource (prometheus-prometheus.yaml) so that the Secret is mounted into the Prometheus Pod:

    [root@k8s-master manifests]# cat prometheus-prometheus.yaml
    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - name: alertmanager-main
          namespace: monitoring
          port: web
      image: quay.mirrors.ustc.edu.cn/prometheus/prometheus:v2.15.2
      nodeSelector:
        kubernetes.io/os: linux
      podMonitorNamespaceSelector: {}
      podMonitorSelector: {}
      replicas: 1
      resources:
        requests:
          memory: 400Mi
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      # add the following two lines
      secrets:
      - etcd-certs
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}
      version: v2.15.2
    [root@k8s-master manifests]# kubectl apply -f prometheus-prometheus.yaml
    prometheus.monitoring.coreos.com/k8s configured
    # Check the certificates inside the Prometheus container
    [root@k8s-master manifests]# kubectl exec -it prometheus-k8s-0 -n monitoring prometheus -- /bin/sh
    Defaulting container name to prometheus.
    Use 'kubectl describe pod/prometheus-k8s-0 -n monitoring' to see all of the containers in this pod.
    /prometheus $ ls /etc/prometheus/secrets/etcd-certs
    ca.crt  server.crt  server.key
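
The ls output shows how the pieces connect:

- The Prometheus Operator mounts each Secret listed under spec.secrets at /etc/prometheus/secrets/<secret name>/.
- kubectl create secret generic --from-file=<path> uses the file's base name as the key, and each key becomes a file in that directory.

The small Python sketch below derives the tlsConfig paths used in the ServiceMonitor from the Secret name and the source files. tls_config_for is a hypothetical helper written only to illustrate the mapping.

```python
import posixpath

# Mount root used by the Prometheus Operator for entries in spec.secrets.
SECRETS_ROOT = "/etc/prometheus/secrets"


def tls_config_for(secret_name, ca_file, cert_file, key_file):
    """Map the files given to `kubectl create secret generic --from-file=...`
    to the paths they end up at inside the Prometheus container."""
    def mounted(source):
        # Without an explicit key, --from-file uses the file's base name as the key.
        return posixpath.join(SECRETS_ROOT, secret_name, posixpath.basename(source))

    return {
        "caFile": mounted(ca_file),
        "certFile": mounted(cert_file),
        "keyFile": mounted(key_file),
    }


if __name__ == "__main__":
    config = tls_config_for(
        "etcd-certs",
        "/etc/kubernetes/pki/etcd/ca.crt",
        "/etc/kubernetes/pki/etcd/server.crt",
        "/etc/kubernetes/pki/etcd/server.key",
    )
    for field, path in config.items():
        print(f"{field}: {path}")
```

If you rename the Secret, or pass a different key name with --from-file=<key>=<path>, the tlsConfig paths in the ServiceMonitor must change accordingly.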

Create the ServiceMonitor to onboard the etcd endpoint

    [root@k8s-master etcd]# cat etcd-servicemonitor.yaml
    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        k8s-app: etcd1
      name: etcd
      namespace: monitoring
    spec:
      endpoints:
      - interval: 30s
        port: etcd
        scheme: https
        tlsConfig:
          caFile: /etc/prometheus/secrets/etcd-certs/ca.crt
          certFile: /etc/prometheus/secrets/etcd-certs/server.crt
          keyFile: /etc/prometheus/secrets/etcd-certs/server.key
          # insecureSkipVerify: true   # skip server certificate verification
      namespaceSelector:
        matchNames:
        - kube-system
      selector:
        matchLabels:
          k8s-app: etcd1
    [root@k8s-master etcd]# kubectl apply -f etcd-servicemonitor.yaml
    servicemonitor.monitoring.coreos.com/etcd created

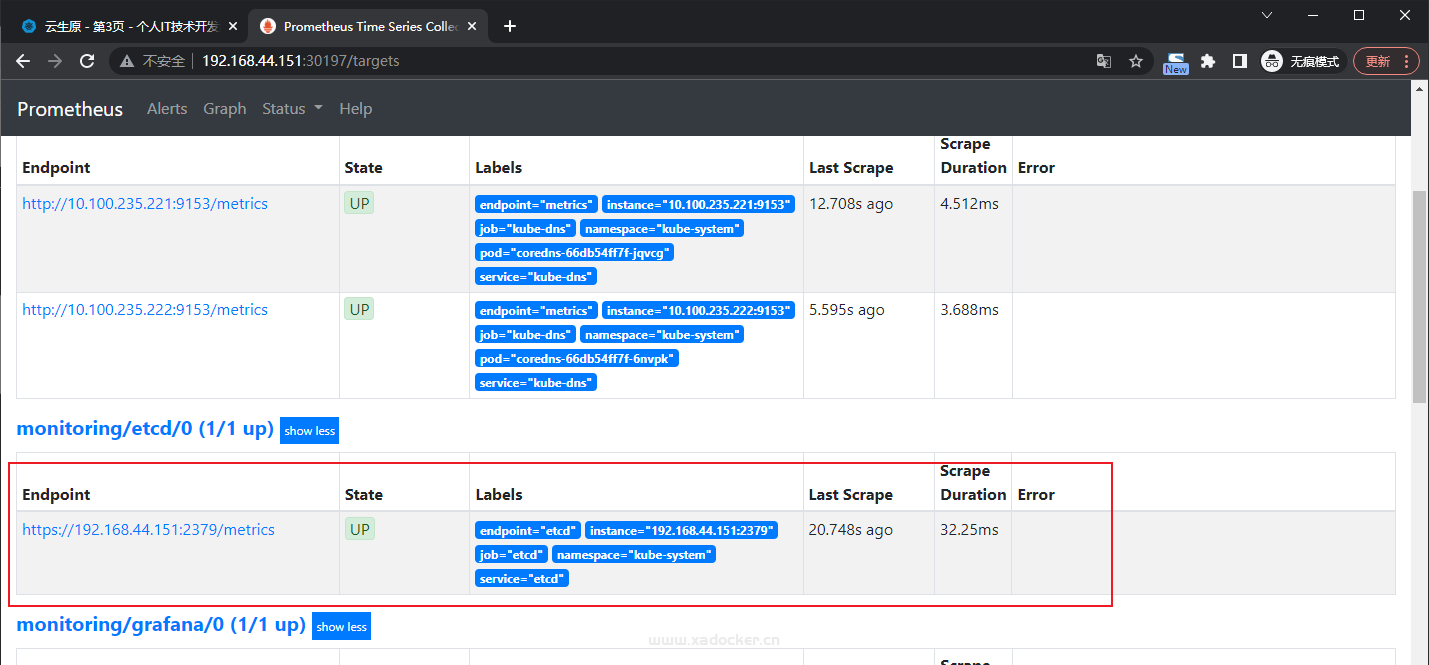

After a short while, the etcd target endpoint shows up in Prometheus.
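
Conceptually, the operator turns this ServiceMonitor into a Prometheus scrape job that uses Kubernetes endpoints discovery. The job works in three steps:

1. It keeps Services in the namespaces listed under namespaceSelector whose labels match selector.
2. It keeps Endpoints ports whose name equals the endpoint's port (etcd).
3. It builds each target URL from scheme, address and port. The path defaults to /metrics.

The Python sketch below models that selection on plain dicts. It is a simplification of the relabeling rules the operator actually generates. servicemonitor_targets is a hypothetical name, and the dicts carry only the fields used from the manifests above.

```python
# Only the fields this model uses, copied from the manifests above.
MONITOR = {
    "metadata": {"name": "etcd", "namespace": "monitoring"},
    "spec": {
        "endpoints": [{"interval": "30s", "port": "etcd", "scheme": "https"}],
        "namespaceSelector": {"matchNames": ["kube-system"]},
        "selector": {"matchLabels": {"k8s-app": "etcd1"}},
    },
}
SERVICE = {"metadata": {"name": "etcd", "namespace": "kube-system", "labels": {"k8s-app": "etcd1"}}}
ENDPOINTS = {
    "metadata": {"name": "etcd", "namespace": "kube-system"},
    "subsets": [{
        "addresses": [{"ip": "192.168.44.151"}],
        "ports": [{"name": "etcd", "port": 2379, "protocol": "TCP"}],
    }],
}


def servicemonitor_targets(monitor, services, endpoints):
    """Simplified model of how a ServiceMonitor turns Services into scrape URLs."""
    spec = monitor["spec"]
    namespaces = set(spec["namespaceSelector"]["matchNames"])
    wanted_labels = spec["selector"]["matchLabels"]
    endpoints_by_key = {
        (ep["metadata"]["namespace"], ep["metadata"]["name"]): ep for ep in endpoints
    }
    targets = []
    for svc in services:
        meta = svc["metadata"]
        labels = meta.get("labels", {})
        if meta["namespace"] not in namespaces:
            continue  # namespaceSelector does not match
        if any(labels.get(k) != v for k, v in wanted_labels.items()):
            continue  # label selector does not match
        ep = endpoints_by_key.get((meta["namespace"], meta["name"]))
        if ep is None:
            continue
        for endpoint in spec["endpoints"]:
            scheme = endpoint.get("scheme", "http")
            path = endpoint.get("path", "/metrics")
            for subset in ep.get("subsets", []):
                for port in subset.get("ports", []):
                    if port.get("name") != endpoint["port"]:
                        continue  # only the named port is scraped
                    for address in subset.get("addresses", []):
                        targets.append(f"{scheme}://{address['ip']}:{port['port']}{path}")
    return targets


if __name__ == "__main__":
    print(servicemonitor_targets(MONITOR, [SERVICE], [ENDPOINTS]))
    # ['https://192.168.44.151:2379/metrics']
```

The model explains why the label k8s-app: etcd1 and the port name etcd appear in three places: the Endpoints object, the Service, and the ServiceMonitor. If either one does not match, no target is produced.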

Add an etcd dashboard in Grafana

Import Grafana dashboard ID: 9733
