
Lately I've been tinkering with Nacos from Python, so here is a write-up of a quick way to stand up a Nacos cluster.

Creating resources with Terraform

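Main resources:

- three Nacos nodes
- one MySQL node
- one Nginx node
- a local user's public key, installed on all of the nodes above
- an Ansible inventory file, rendered locally from a template
- a DNS record pointing at the Nginx host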

Prepare the project directory

```shell
xadocker@xadocker-virtual-machine:~$ cd workdir/datadir/terraform/
xadocker@xadocker-virtual-machine:~/workdir/datadir/terraform$ mkdir -p tf-nacos-cluster/ansible-deploy
xadocker@xadocker-virtual-machine:~/workdir/datadir/terraform$ cd tf-nacos-cluster/
```

Provider AK/SK configuration

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat providers.tf

```hcl
provider "alicloud" {
  access_key = var.access_key
  secret_key = var.secret_key
  region     = var.region
}
```

Provider version configuration

Note: the Terraform state is stored in an OSS bucket here, so create that bucket yourself beforehand.

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat version.tf

```hcl
terraform {
  backend "oss" {
    profile    = "terraform"
    bucket     = "iac-tf-oss-backend"
    prefix     = "cluster-manager/env-dev/nacos-cluster"
    access_key = "ossak"
    secret_key = "osssk"
    region     = "cn-guangzhou"
  }

  required_providers {
    alicloud = {
      #source = "aliyun/alicloud"
      source  = "local-registry/aliyun/alicloud"
      version = "1.166.0"
    }
    local = {
      #source = "hashicorp/local"
      source  = "local-registry/hashicorp/local"
      version = "2.2.3"
    }
    archive = {
      #source = "hashicorp/archive"
      source  = "local-registry/hashicorp/archive"
      version = "2.2.0"
    }
    http = {
      #source = "hashicorp/http"
      source  = "local-registry/hashicorp/http"
      version = "3.2.1"
    }
    null = {
      #source = "hashicorp/null"
      source  = "local-registry/hashicorp/null"
      version = "3.2.1"
    }
    random = {
      #source = "hashicorp/random"
      source  = "local-registry/hashicorp/random"
      version = "3.4.3"
    }
    template = {
      #source = "hashicorp/template"
      source  = "local-registry/hashicorp/template"
      version = "2.2.0"
    }
  }
}
```
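The provider `source` addresses above point at a private mirror (`local-registry/...`) and not at the public registry, with the public addresses left commented out. Terraform source addresses have the form `[hostname/]namespace/type`. When the hostname is omitted, `registry.terraform.io` is implied. Below is a small Python sketch that splits an address the same way. The helper `parse_provider_source` is hypothetical and not part of any Terraform tooling.

```python
from typing import NamedTuple

# Hostname Terraform assumes when a source address has only two parts.
DEFAULT_REGISTRY = "registry.terraform.io"


class ProviderSource(NamedTuple):
    hostname: str
    namespace: str
    provider_type: str


def parse_provider_source(source: str) -> ProviderSource:
    """Split a provider source address of the form [hostname/]namespace/type."""
    parts = source.split("/")
    if len(parts) == 2:
        # e.g. "aliyun/alicloud": implicitly served by the public registry
        return ProviderSource(DEFAULT_REGISTRY, parts[0], parts[1])
    if len(parts) == 3:
        # e.g. "local-registry/aliyun/alicloud": explicit hostname comes first
        return ProviderSource(parts[0], parts[1], parts[2])
    raise ValueError(f"not a valid provider source address: {source!r}")


if __name__ == "__main__":
    for src in ("aliyun/alicloud", "local-registry/hashicorp/local"):
        print(f"{src} -> {parse_provider_source(src)}")
```

A hostname such as `local-registry` only resolves if your Terraform CLI configuration points it at a mirror or a private registry. The post doesn't show that part.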

Variables configuration

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat variables.tf

```hcl
variable "access_key" {
  type      = string
  default   = "xxxxxxx"
  sensitive = true
}
# export TF_VAR_access_key=""

variable "secret_key" {
  type      = string
  default   = "xxxxxxxxxx"
  sensitive = true
}
# export TF_VAR_secret_key=""

variable "region" {
  type    = string
  default = "cn-guangzhou"
}
# export TF_VAR_region="cn-guangzhou"
```
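Terraform reads an input variable from an environment variable named `TF_VAR_<variable name>`, so `access_key` maps to `TF_VAR_access_key`. This keeps the real AK/SK out of `variables.tf`. Here is a minimal Python sketch that assumes you drive Terraform from a script through `subprocess`. The helpers `terraform_env` and `terraform_plan` are hypothetical names.

```python
from __future__ import annotations

import os
import subprocess


def terraform_env(variables: dict[str, str], base: dict[str, str] | None = None) -> dict[str, str]:
    """Copy the environment and export each input variable as TF_VAR_<name>."""
    env = dict(os.environ if base is None else base)
    for name, value in variables.items():
        env[f"TF_VAR_{name}"] = value
    return env


def terraform_plan(workdir: str, variables: dict[str, str]) -> int:
    # Run `terraform plan` in workdir with the credentials supplied via the environment.
    result = subprocess.run(["terraform", "plan"], cwd=workdir, env=terraform_env(variables))
    return result.returncode
```

Passing `base` explicitly makes the function easy to test without touching the real environment.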

Resource declarations in main.tf

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat main.tf

variable "vpc_name" {

default = "tf-cluster-paas"

}

resource "alicloud_vpc" "vpc" {

vpc_name = var.vpc_name

cidr_block = "172.16.0.0/16"

}

data "alicloud_zones" "default" {

available_disk_category = "cloud_efficiency"

available_resource_creation = "VSwitch"

}

resource "alicloud_vswitch" "vswitch" {

vpc_id = alicloud_vpc.vpc.id

cidr_block = "172.16.0.0/24"

zone_id = data.alicloud_zones.default.zones[0].id

vswitch_name = var.vpc_name

}

resource "alicloud_security_group" "group" {

name = "tf-nacos-cluster"

description = "nacos-cluster"

vpc_id = alicloud_vpc.vpc.id

}

resource "alicloud_security_group_rule" "allow_nacos_tcp" {

type = "ingress"

ip_protocol = "tcp"

nic_type = "intranet"

policy = "accept"

port_range = "8848/8848"

priority = 1

security_group_id = alicloud_security_group.group.id

cidr_ip = "0.0.0.0/0"

}

resource "alicloud_security_group_rule" "allow_nginx_tcp" {

type = "ingress"

ip_protocol = "tcp"

nic_type = "intranet"

policy = "accept"

port_range = "80/80"

priority = 1

security_group_id = alicloud_security_group.group.id

cidr_ip = "0.0.0.0/0"

}

resource "alicloud_security_group_rule" "allow_ssh_tcp" {

type = "ingress"

ip_protocol = "tcp"

nic_type = "intranet"

policy = "accept"

port_range = "22/22"

priority = 1

security_group_id = alicloud_security_group.group.id

cidr_ip = "0.0.0.0/0"

}

resource "alicloud_instance" "instance_nacos_node" {

count = 3

availability_zone = "cn-guangzhou-a"

security_groups = alicloud_security_group.group.*.id

instance_type = "ecs.s6-c1m1.small"

system_disk_category = "cloud_essd"

system_disk_size = 40

image_id = "centos_7_6_x64_20G_alibase_20211130.vhd"

instance_name = "tf-nacos-cluster-nacos-${count.index}"

vswitch_id = alicloud_vswitch.vswitch.id

internet_max_bandwidth_out = 10

internet_charge_type = "PayByTraffic"

instance_charge_type = "PostPaid"

password = "1qaz@WSX@XXXXXXX"

}

resource "alicloud_instance" "instance_nginx_node" {

count = 1

availability_zone = "cn-guangzhou-a"

security_groups = alicloud_security_group.group.*.id

instance_type = "ecs.s6-c1m1.small"

system_disk_category = "cloud_essd"

system_disk_size = 40

image_id = "centos_7_6_x64_20G_alibase_20211130.vhd"

instance_name = "tf-nacos-cluster-nginx-${count.index}"

vswitch_id = alicloud_vswitch.vswitch.id

internet_max_bandwidth_out = 10

internet_charge_type = "PayByTraffic"

instance_charge_type = "PostPaid"

password = "1qaz@WSX@XXXXXXX"

}

resource "alicloud_instance" "instance_mysql_node" {

count = 1

availability_zone = "cn-guangzhou-a"

security_groups = alicloud_security_group.group.*.id

instance_type = "ecs.s6-c1m1.small"

system_disk_category = "cloud_essd"

system_disk_size = 40

image_id = "centos_7_6_x64_20G_alibase_20211130.vhd"

instance_name = "tf-nacos-cluster-mysql-${count.index}"

vswitch_id = alicloud_vswitch.vswitch.id

internet_max_bandwidth_out = 10

internet_charge_type = "PayByTraffic"

instance_charge_type = "PostPaid"

password = "1qaz@WSX@XXXXXXX"

}

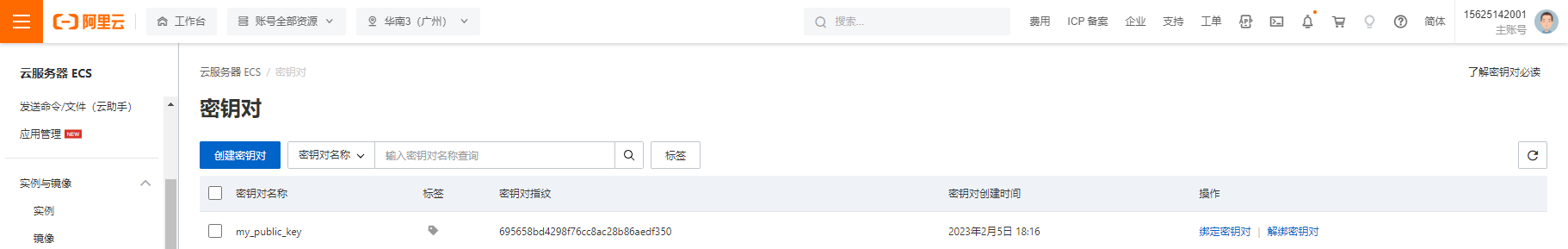

# 在阿里云创建本地root用户公钥

resource "alicloud_ecs_key_pair" "publickey" {

key_pair_name = "my_public_key"

public_key = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCvGLeFdDq4bxVbNmnSKWY+3gUMzs9rSmnHdHUxWZLUo4n980pFzQQ+UPytePnE+DSVES8Pl5KmdFuWsbbNEz7R6bO4lkKkOE2+HC/DwyhmpIM78PDSfFdf+WVIg7VLttIrhJJ6rcv/zdME3si/egXs0I9TfOJ/oO6nzkDjBBEwsvjES6lLs9MQdXa5wi/KAcL/p8OUIWEF8KhNUTQLLp0JxlcJhgx3U+ucn8yd9R2RqOBjdGJXk3rhgSqprAg73t0kt8BREOGoIqZq+e+RNz3/vaTV1yhra45Ni+vJDtTzYnZSQc0xSZU80ZYTSv80Y6AX+rH/4hp+yi4/ps4MOF8B root@xadocker-virtual-machine"

}

# 将上述创建的用户公钥配置到所创建的节点里

resource "alicloud_ecs_key_pair_attachment" "my_public_key" {

key_pair_name = "my_public_key"

instance_ids = concat(alicloud_instance.instance_nacos_node.*.id,alicloud_instance.instance_mysql_node.*.id,alicloud_instance.instance_nginx_node.*.id)

}

# 在本地执行渲染ansible的主机清单配置文件

resource "local_file" "ip_local_file" {

filename = "./ansible-deploy/ansible_inventory.ini"

content = templatefile("ansible_inventory.tpl",

{

port = 22,

user = "root",

password = "1qaz@WSX@XXXXXXX",

nacos_ip_addrs = alicloud_instance.instance_nacos_node.*.public_ip,

nginx_ip_addrs = alicloud_instance.instance_nginx_node.*.public_ip,

mysql_ip_addrs = alicloud_instance.instance_mysql_node.*.public_ip

})

}

resource "alicloud_alidns_record" "record" {

domain_name = "xadocker.cn"

rr = "nacos-dev"

type = "A"

value = alicloud_instance.instance_nginx_node.0.public_ip

remark = "create by tf for nacos nginx lb"

status = "ENABLE"

}
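The DNS record combines `rr` and `domain_name` into the hostname that Nginx's `server_name` has to match later. The playbook further down sets `nacos_nginx_server_name: nacos-dev.xadocker.cn`. Here is a trivial Python consistency check, useful if you rename either side. The function name is illustrative only.

```python
def record_fqdn(rr: str, domain_name: str) -> str:
    """Join an Alibaba Cloud DNS host record (rr) with its domain name.

    In Alibaba Cloud DNS the host record "@" stands for the bare domain.
    """
    if rr == "@":
        return domain_name
    return f"{rr}.{domain_name}"


# Values copied from main.tf and from the playbook vars (nacos_nginx_server_name).
RR, DOMAIN = "nacos-dev", "xadocker.cn"
NGINX_SERVER_NAME = "nacos-dev.xadocker.cn"

if __name__ == "__main__":
    fqdn = record_fqdn(RR, DOMAIN)
    print(fqdn, "matches server_name:", fqdn == NGINX_SERVER_NAME)
```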

Ansible inventory template

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat ansible_inventory.tpl

```
[nacos]
%{ for addr in nacos_ip_addrs ~}
${addr} ansible_ssh_port=${port} ansible_ssh_user=${user}
%{ endfor ~}
[mysql]
%{ for addr in mysql_ip_addrs ~}
${addr} ansible_ssh_port=${port} ansible_ssh_user=${user}
%{ endfor ~}
[nginx]
%{ for addr in nginx_ip_addrs ~}
${addr} ansible_ssh_port=${port} ansible_ssh_user=${user}
%{ endfor ~}
```
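The `~` strip markers remove the newline that follows each `for`/`endfor` directive. As a result, every group header is followed directly by its host lines, with no blank lines. Below is a rough Python equivalent of what `templatefile` should produce from this template, handy for checking the format without running Terraform. The function `render_inventory` is a hypothetical helper.

```python
from __future__ import annotations


def render_inventory(groups: dict[str, list[str]], port: int = 22, user: str = "root") -> str:
    """Rebuild the text ansible_inventory.tpl renders: an INI section per
    group followed by one host line per address, with no blank lines."""
    lines: list[str] = []
    for group, addrs in groups.items():  # dicts preserve insertion order
        lines.append(f"[{group}]")
        for addr in addrs:
            lines.append(f"{addr} ansible_ssh_port={port} ansible_ssh_user={user}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_inventory({
        "nacos": ["8.134.108.88", "8.134.8.241", "8.134.65.121"],
        "mysql": ["8.134.79.6"],
        "nginx": ["8.134.114.171"],
    }), end="")
```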

Directory layout at this point

xadocker@xadocker-virtual-machine:~/workdir/datadir/terraform/tf-nacos-cluster$ tree

```
.
├── ansible-deploy
├── ansible_inventory.tpl
├── main.tf
├── providers.tf
├── variables.tf
└── version.tf

1 directory, 5 files
```

Run terraform apply to create the resources

xadocker@xadocker-virtual-machine:~/workdir/datadir/terraform/tf-nacos-cluster$ terraform apply

```
data.alicloud_zones.default: Reading...
data.alicloud_zones.default: Read complete after 2s [id=2052605375]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # alicloud_alidns_record.record will be created
  + resource "alicloud_alidns_record" "record" {
      + domain_name = "xadocker.cn"
      + id = (known after apply)
      + line = "default"
      + remark = "create by tf for nacos nginx lb"
      + rr = "nacos-dev"
      + status = "ENABLE"
      + ttl = 600
      + type = "A"
      + value = (known after apply)
    }

  # alicloud_ecs_key_pair.publickey will be created
  + resource "alicloud_ecs_key_pair" "publickey" {
      + finger_print = (known after apply)
      + id = (known after apply)
      + key_name = (known after apply)
      + key_pair_name = "my_public_key"
      + public_key = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCvGLeFdDq4bxVbNmnSKWY+3gUMzs9rSmnHdHUxWZLUo4n980pFzQQ+UPytePnE+DSVES8Pl5KmdFuWsbbNEz7R6bO4lkKkOE2+HC/DwyhmpIM78PDSfFdf+WVIg7VLttIrhJJ6rcv/zdME3si/egXs0I9TfOJ/oO6nzkDjBBEwsvjES6lLs9MQdXa5wi/KAcL/p8OUIWEF8KhNUTQLLp0JxlcJhgx3U+ucn8yd9R2RqOBjdGJXk3rhgSqprAg73t0kt8BREOGoIqZq+e+RNz3/vaTV1yhra45Ni+vJDtTzYnZSQc0xSZU80ZYTSv80Y6AX+rH/4hp+yi4/ps4MOF8B root@xadocker-virtual-machine"
    }

  # alicloud_ecs_key_pair_attachment.my_public_key will be created
  + resource "alicloud_ecs_key_pair_attachment" "my_public_key" {
      + id = (known after apply)
      + instance_ids = (known after apply)
      + key_name = (known after apply)
      + key_pair_name = "my_public_key"
    }

  # alicloud_instance.instance_mysql_node[0] will be created
  + resource "alicloud_instance" "instance_mysql_node" {
      + availability_zone = "cn-guangzhou-a"
      + credit_specification = (known after apply)
      + deletion_protection = false
      + deployment_set_group_no = (known after apply)
      + dry_run = false
      + host_name = (known after apply)
      + id = (known after apply)
      + image_id = "centos_7_6_x64_20G_alibase_20211130.vhd"
      + instance_charge_type = "PostPaid"
      + instance_name = "tf-nacos-cluster-mysql-0"
      + instance_type = "ecs.s6-c1m1.small"
      + internet_charge_type = "PayByTraffic"
      + internet_max_bandwidth_in = (known after apply)
      + internet_max_bandwidth_out = 10
      + key_name = (known after apply)
      + password = (sensitive value)
      + private_ip = (known after apply)
      + public_ip = (known after apply)
      + role_name = (known after apply)
      + secondary_private_ip_address_count = (known after apply)
      + secondary_private_ips = (known after apply)
      + security_groups = (known after apply)
      + spot_strategy = "NoSpot"
      + status = (known after apply)
      + subnet_id = (known after apply)
      + system_disk_category = "cloud_essd"
      + system_disk_performance_level = (known after apply)
      + system_disk_size = 40
      + volume_tags = (known after apply)
      + vswitch_id = (known after apply)
    }

  # alicloud_instance.instance_nacos_node[0] will be created
  + resource "alicloud_instance" "instance_nacos_node" {
      + availability_zone = "cn-guangzhou-a"
      + credit_specification = (known after apply)
      + deletion_protection = false
      + deployment_set_group_no = (known after apply)
      + dry_run = false
      + host_name = (known after apply)
      + id = (known after apply)
      + image_id = "centos_7_6_x64_20G_alibase_20211130.vhd"
      + instance_charge_type = "PostPaid"
      + instance_name = "tf-nacos-cluster-nacos-0"
      + instance_type = "ecs.s6-c1m1.small"
      + internet_charge_type = "PayByTraffic"
      + internet_max_bandwidth_in = (known after apply)
      + internet_max_bandwidth_out = 10
      + key_name = (known after apply)
      + password = (sensitive value)
      + private_ip = (known after apply)
      + public_ip = (known after apply)
      + role_name = (known after apply)
      + secondary_private_ip_address_count = (known after apply)
      + secondary_private_ips = (known after apply)
      + security_groups = (known after apply)
      + spot_strategy = "NoSpot"
      + status = (known after apply)
      + subnet_id = (known after apply)
      + system_disk_category = "cloud_essd"
      + system_disk_performance_level = (known after apply)
      + system_disk_size = 40
      + volume_tags = (known after apply)
      + vswitch_id = (known after apply)
    }

  # alicloud_instance.instance_nacos_node[1] will be created
  + resource "alicloud_instance" "instance_nacos_node" {
      + availability_zone = "cn-guangzhou-a"
      + credit_specification = (known after apply)
      + deletion_protection = false
      + deployment_set_group_no = (known after apply)
      + dry_run = false
      + host_name = (known after apply)
      + id = (known after apply)
      + image_id = "centos_7_6_x64_20G_alibase_20211130.vhd"
      + instance_charge_type = "PostPaid"
      + instance_name = "tf-nacos-cluster-nacos-1"
      + instance_type = "ecs.s6-c1m1.small"
      + internet_charge_type = "PayByTraffic"
      + internet_max_bandwidth_in = (known after apply)
      + internet_max_bandwidth_out = 10
      + key_name = (known after apply)
      + password = (sensitive value)
      + private_ip = (known after apply)
      + public_ip = (known after apply)
      + role_name = (known after apply)
      + secondary_private_ip_address_count = (known after apply)
      + secondary_private_ips = (known after apply)
      + security_groups = (known after apply)
      + spot_strategy = "NoSpot"
      + status = (known after apply)
      + subnet_id = (known after apply)
      + system_disk_category = "cloud_essd"
      + system_disk_performance_level = (known after apply)
      + system_disk_size = 40
      + volume_tags = (known after apply)
      + vswitch_id = (known after apply)
    }

  # alicloud_instance.instance_nacos_node[2] will be created
  + resource "alicloud_instance" "instance_nacos_node" {
      + availability_zone = "cn-guangzhou-a"
      + credit_specification = (known after apply)
      + deletion_protection = false
      + deployment_set_group_no = (known after apply)
      + dry_run = false
      + host_name = (known after apply)
      + id = (known after apply)
      + image_id = "centos_7_6_x64_20G_alibase_20211130.vhd"
      + instance_charge_type = "PostPaid"
      + instance_name = "tf-nacos-cluster-nacos-2"
      + instance_type = "ecs.s6-c1m1.small"
      + internet_charge_type = "PayByTraffic"
      + internet_max_bandwidth_in = (known after apply)
      + internet_max_bandwidth_out = 10
      + key_name = (known after apply)
      + password = (sensitive value)
      + private_ip = (known after apply)
      + public_ip = (known after apply)
      + role_name = (known after apply)
      + secondary_private_ip_address_count = (known after apply)
      + secondary_private_ips = (known after apply)
      + security_groups = (known after apply)
      + spot_strategy = "NoSpot"
      + status = (known after apply)
      + subnet_id = (known after apply)
      + system_disk_category = "cloud_essd"
      + system_disk_performance_level = (known after apply)
      + system_disk_size = 40
      + volume_tags = (known after apply)
      + vswitch_id = (known after apply)
    }

  # alicloud_instance.instance_nginx_node[0] will be created
  + resource "alicloud_instance" "instance_nginx_node" {
      + availability_zone = "cn-guangzhou-a"
      + credit_specification = (known after apply)
      + deletion_protection = false
      + deployment_set_group_no = (known after apply)
      + dry_run = false
      + host_name = (known after apply)
      + id = (known after apply)
      + image_id = "centos_7_6_x64_20G_alibase_20211130.vhd"
      + instance_charge_type = "PostPaid"
      + instance_name = "tf-nacos-cluster-nginx-0"
      + instance_type = "ecs.s6-c1m1.small"
      + internet_charge_type = "PayByTraffic"
      + internet_max_bandwidth_in = (known after apply)
      + internet_max_bandwidth_out = 10
      + key_name = (known after apply)
      + password = (sensitive value)
      + private_ip = (known after apply)
      + public_ip = (known after apply)
      + role_name = (known after apply)
      + secondary_private_ip_address_count = (known after apply)
      + secondary_private_ips = (known after apply)
      + security_groups = (known after apply)
      + spot_strategy = "NoSpot"
      + status = (known after apply)
      + subnet_id = (known after apply)
      + system_disk_category = "cloud_essd"
      + system_disk_performance_level = (known after apply)
      + system_disk_size = 40
      + volume_tags = (known after apply)
      + vswitch_id = (known after apply)
    }

  # alicloud_security_group.group will be created
  + resource "alicloud_security_group" "group" {
      + description = "nacos-cluster"
      + id = (known after apply)
      + inner_access = (known after apply)
      + inner_access_policy = (known after apply)
      + name = "tf-nacos-cluster"
      + security_group_type = "normal"
      + vpc_id = (known after apply)
    }

  # alicloud_security_group_rule.allow_nacos_tcp will be created
  + resource "alicloud_security_group_rule" "allow_nacos_tcp" {
      + cidr_ip = "0.0.0.0/0"
      + id = (known after apply)
      + ip_protocol = "tcp"
      + nic_type = "intranet"
      + policy = "accept"
      + port_range = "8848/8848"
      + prefix_list_id = (known after apply)
      + priority = 1
      + security_group_id = (known after apply)
      + type = "ingress"
    }

  # alicloud_security_group_rule.allow_nginx_tcp will be created
  + resource "alicloud_security_group_rule" "allow_nginx_tcp" {
      + cidr_ip = "0.0.0.0/0"
      + id = (known after apply)
      + ip_protocol = "tcp"
      + nic_type = "intranet"
      + policy = "accept"
      + port_range = "80/80"
      + prefix_list_id = (known after apply)
      + priority = 1
      + security_group_id = (known after apply)
      + type = "ingress"
    }

  # alicloud_security_group_rule.allow_ssh_tcp will be created
  + resource "alicloud_security_group_rule" "allow_ssh_tcp" {
      + cidr_ip = "0.0.0.0/0"
      + id = (known after apply)
      + ip_protocol = "tcp"
      + nic_type = "intranet"
      + policy = "accept"
      + port_range = "22/22"
      + prefix_list_id = (known after apply)
      + priority = 1
      + security_group_id = (known after apply)
      + type = "ingress"
    }

  # alicloud_vpc.vpc will be created
  + resource "alicloud_vpc" "vpc" {
      + cidr_block = "172.16.0.0/16"
      + id = (known after apply)
      + ipv6_cidr_block = (known after apply)
      + name = (known after apply)
      + resource_group_id = (known after apply)
      + route_table_id = (known after apply)
      + router_id = (known after apply)
      + router_table_id = (known after apply)
      + status = (known after apply)
      + vpc_name = "tf-cluster-paas"
    }

  # alicloud_vswitch.vswitch will be created
  + resource "alicloud_vswitch" "vswitch" {
      + availability_zone = (known after apply)
      + cidr_block = "172.16.0.0/24"
      + id = (known after apply)
      + name = (known after apply)
      + status = (known after apply)
      + vpc_id = (known after apply)
      + vswitch_name = "tf-cluster-paas"
      + zone_id = "cn-guangzhou-a"
    }

  # local_file.ip_local_file will be created
  + resource "local_file" "ip_local_file" {
      + content = (known after apply)
      + directory_permission = "0777"
      + file_permission = "0777"
      + filename = "./ansible-deploy/ansible_inventory.ini"
      + id = (known after apply)
    }

Plan: 15 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

alicloud_ecs_key_pair.publickey: Creating...
alicloud_vpc.vpc: Creating...
alicloud_ecs_key_pair.publickey: Creation complete after 4s [id=my_public_key]
alicloud_vpc.vpc: Creation complete after 9s [id=vpc-7xv4dqz2ofevofcr0u9t9]
alicloud_security_group.group: Creating...
alicloud_vswitch.vswitch: Creating...
alicloud_security_group.group: Creation complete after 3s [id=sg-7xvb1hvto3njfp382kie]
alicloud_security_group_rule.allow_nacos_tcp: Creating...
alicloud_security_group_rule.allow_ssh_tcp: Creating...
alicloud_security_group_rule.allow_nginx_tcp: Creating...
alicloud_security_group_rule.allow_nginx_tcp: Creation complete after 1s [id=sg-7xvb1hvto3njfp382kie:ingress:tcp:80/80:intranet:0.0.0.0/0:accept:1]
alicloud_security_group_rule.allow_ssh_tcp: Creation complete after 1s [id=sg-7xvb1hvto3njfp382kie:ingress:tcp:22/22:intranet:0.0.0.0/0:accept:1]
alicloud_security_group_rule.allow_nacos_tcp: Creation complete after 1s [id=sg-7xvb1hvto3njfp382kie:ingress:tcp:8848/8848:intranet:0.0.0.0/0:accept:1]
alicloud_vswitch.vswitch: Creation complete after 7s [id=vsw-7xvj9glllhbli978o9uax]
alicloud_instance.instance_nginx_node[0]: Creating...
alicloud_instance.instance_nacos_node[2]: Creating...
alicloud_instance.instance_nacos_node[1]: Creating...
alicloud_instance.instance_nacos_node[0]: Creating...
alicloud_instance.instance_mysql_node[0]: Creating...
alicloud_instance.instance_nginx_node[0]: Still creating... [10s elapsed]
alicloud_instance.instance_nacos_node[2]: Still creating... [10s elapsed]
alicloud_instance.instance_nacos_node[1]: Still creating... [10s elapsed]
alicloud_instance.instance_nacos_node[0]: Still creating... [10s elapsed]
alicloud_instance.instance_mysql_node[0]: Still creating... [10s elapsed]
alicloud_instance.instance_nginx_node[0]: Creation complete after 12s [id=i-7xva1zlkypri0k4mnhk4]
alicloud_alidns_record.record: Creating...
alicloud_instance.instance_nacos_node[2]: Creation complete after 12s [id=i-7xv9q6jzyllqy6i7siy3]
alicloud_instance.instance_nacos_node[0]: Creation complete after 13s [id=i-7xv6zscdflf8id3uo7uj]
alicloud_instance.instance_mysql_node[0]: Creation complete after 13s [id=i-7xvbf7kvtsy3s0fv9lb6]
alicloud_instance.instance_nacos_node[1]: Creation complete after 13s [id=i-7xvbf7kvtsy3s0fv9lb5]
alicloud_ecs_key_pair_attachment.my_public_key: Creating...
local_file.ip_local_file: Creating...
local_file.ip_local_file: Creation complete after 0s [id=85bc2c6270a86991b774446140bf145ba89f11fc]
alicloud_ecs_key_pair_attachment.my_public_key: Creation complete after 1s [id=my_public_key:["i-7xva1zlkypri0k4mnhk4","i-7xv6zscdflf8id3uo7uj","i-7xvbf7kvtsy3s0fv9lb6","i-7xv9q6jzyllqy6i7siy3","i-7xvbf7kvtsy3s0fv9lb5"]]
alicloud_alidns_record.record: Creation complete after 2s [id=811113556473707520]

Apply complete! Resources: 15 added, 0 changed, 0 destroyed.
```

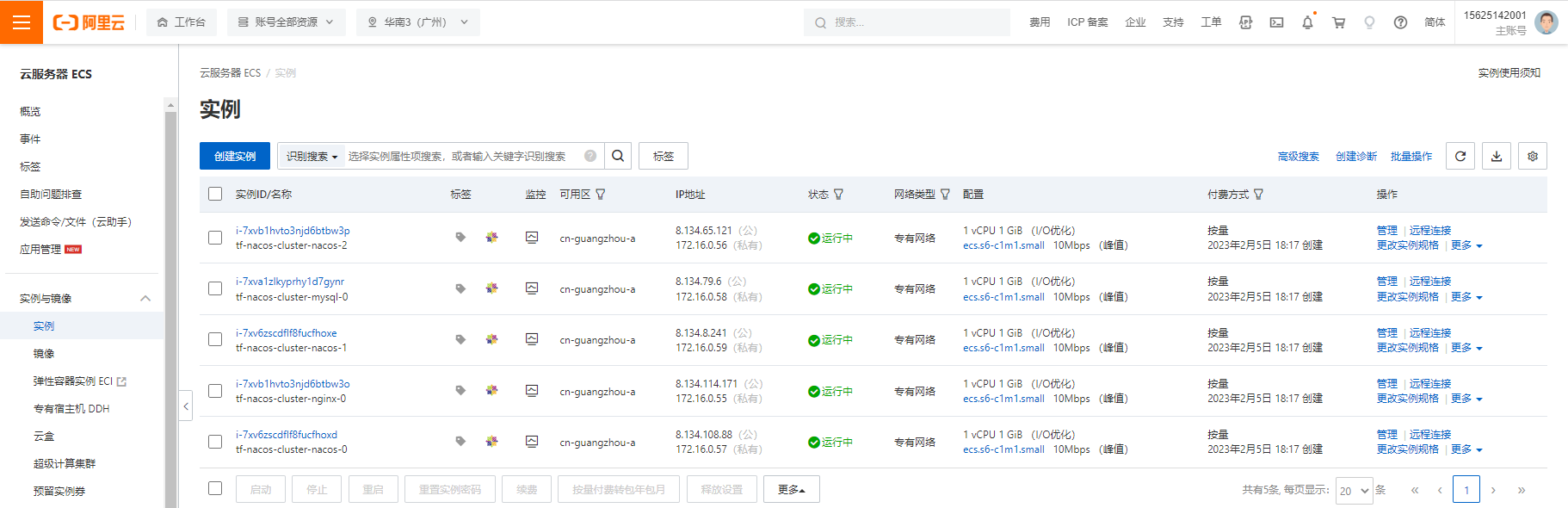

The resources are now created, and you can log in to the Alibaba Cloud console to verify them.
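The "15 to add" in the plan can be checked by hand against `main.tf`. The `alicloud_zones` data source is only read, so it is not counted. Here is a short Python tally of the resource counts declared in the post.

```python
# Resource counts read off main.tf (the data source is read, not created).
planned = {
    "alicloud_vpc": 1,
    "alicloud_vswitch": 1,
    "alicloud_security_group": 1,
    "alicloud_security_group_rule": 3,   # ports 8848, 80 and 22
    "alicloud_instance": 3 + 1 + 1,      # nacos x3, nginx x1, mysql x1
    "alicloud_ecs_key_pair": 1,
    "alicloud_ecs_key_pair_attachment": 1,
    "local_file": 1,
    "alicloud_alidns_record": 1,
}

total = sum(planned.values())
print(f"Plan: {total} to add")  # prints "Plan: 15 to add"
```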

The generated Ansible inventory file

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat ansible-deploy/ansible_inventory.ini

```ini
[nacos]
8.134.108.88 ansible_ssh_port=22 ansible_ssh_user=root
8.134.8.241 ansible_ssh_port=22 ansible_ssh_user=root
8.134.65.121 ansible_ssh_port=22 ansible_ssh_user=root
[mysql]
8.134.79.6 ansible_ssh_port=22 ansible_ssh_user=root
[nginx]
8.134.114.171 ansible_ssh_port=22 ansible_ssh_user=root
```

Configuring the cluster with Ansible

I didn't bother with roles here. There isn't much to configure, so everything lives in a single playbook.

The playbook

Note: I uploaded the JDK and Nacos packages to OSS beforehand, so prepare your own copies.

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat ansible-deploy/nacos-playbook.yaml

```yaml
---
- name: install nacos
  hosts: all
  vars:
    mysql_root_password: 1qaz@WSX@NNNN
    nacos_mysql_db: nacos
    nacos_mysql_user: nacos
    nacos_mysql_password: naCos@1NNNN
    nacos_xms_size: 756m
    nacos_xmx_size: 756m
    nacos_xmn_size: 256m
    nacos_nginx_server_name: nacos-dev.xadocker.cn
  tasks:
    - name: get java rpm
      when: inventory_hostname in groups['nacos']
      get_url:
        url: 'https://xadocker-resource.oss-cn-guangzhou-internal.aliyuncs.com/jdk-8u351-linux-x64.rpm'
        dest: /usr/local/src/jdk-8u351-linux-x64.rpm

    - name: install java
      when: inventory_hostname in groups['nacos']
      yum:
        name: /usr/local/src/jdk-8u351-linux-x64.rpm
        state: present

    - name: get nacos zip
      when: inventory_hostname in groups['nacos']
      get_url:
        #url: https://github.com/alibaba/nacos/releases/download/1.4.4/nacos-server-1.4.4.tar.gz
        url: https://xadocker-resource.oss-cn-guangzhou-internal.aliyuncs.com/nacos-server-1.4.4.tar.gz
        dest: /usr/local/src/nacos-server-1.4.4.tar.gz

    - name: unarchive nacos
      when: inventory_hostname in groups['nacos']
      unarchive:
        src: /usr/local/src/nacos-server-1.4.4.tar.gz
        dest: /usr/local
        remote_src: yes

    - name: render nacos config
      when: inventory_hostname in groups['nacos']
      template: src={{ item.src }} dest={{ item.dest }}
      with_items:
        - { src: "./application.properties.j2", dest: "/usr/local/nacos/conf/application.properties" }
        - { src: "./cluster.conf.j2", dest: "/usr/local/nacos/conf/cluster.conf" }
        - { src: "./startup.sh", dest: "/usr/local/nacos/bin/startup.sh" }

    - name: Installed Nginx repo
      when: inventory_hostname in groups['nginx']
      yum_repository:
        name: nginx
        description: nginx
        baseurl: http://nginx.org/packages/centos/$releasever/$basearch/
        gpgcheck: no

    - name: install nginx
      when: inventory_hostname in groups['nginx']
      yum:
        name: nginx
        state: present

    - name: render nginx lb config for nacos
      when: inventory_hostname in groups['nginx']
      template:
        src: ./nginx-nacos.conf.j2
        dest: /etc/nginx/conf.d/nginx-nacos.conf

    - name: start nginx
      when: inventory_hostname in groups['nginx']
      systemd:
        name: nginx
        state: started
        enabled: yes

    - name: Installed mysql repo
      when: inventory_hostname in groups['mysql']
      yum_repository:
        name: mysql57-community
        description: mysql57-community
        baseurl: http://repo.mysql.com/yum/mysql-5.7-community/el/7/$basearch/
        gpgcheck: no

    - name: install mysql
      when: inventory_hostname in groups['mysql']
      yum:
        name: mysql-community-server
        state: present

    - name: start mysqld
      when: inventory_hostname in groups['mysql']
      systemd:
        name: mysqld
        state: started
        enabled: yes

    - name: get mysql init password
      when: inventory_hostname in groups['mysql']
      shell: grep 'password is generated' /var/log/mysqld.log | awk '{print $NF}'
      register: mysql_init_password

    - name: output mysql_init_password
      when: inventory_hostname in groups['mysql']
      debug:
        msg: "{{ mysql_init_password.stdout }}"

    - name: update mysql root password
      shell: |
        mysql --connect-expired-password -uroot -p"{{ mysql_init_password.stdout }}" -e "ALTER USER 'root'@'localhost' IDENTIFIED BY '{{ mysql_root_password }}'" && touch /tmp/update_mysql_root_password.txt
      args:
        creates: /tmp/update_mysql_root_password.txt
      when: inventory_hostname in groups['mysql']
      register: someoutput

    - name: outputsome
      when: inventory_hostname in groups['mysql']
      debug:
        msg: "{{ someoutput }}"

    - name: copy nacos-mysql.sql
      when: inventory_hostname in groups['mysql']
      copy:
        src: ./nacos-mysql.sql
        dest: /usr/local/src/nacos-mysql.sql

    # Create the Nacos database, grant the Nacos user access to it, then load the schema
    - name: init db nacos
      shell: |
        mysql --connect-expired-password -uroot -p"{{ mysql_root_password }}" -e "CREATE DATABASE IF NOT EXISTS {{ nacos_mysql_db }} DEFAULT CHARACTER SET utf8mb4;"
        mysql --connect-expired-password -uroot -p"{{ mysql_root_password }}" -e "grant all on {{ nacos_mysql_db }}.* to '{{ nacos_mysql_user }}'@'%' identified by '{{ nacos_mysql_password }}';"
        mysql --connect-expired-password -u {{ nacos_mysql_user }} -p"{{ nacos_mysql_password }}" -B {{ nacos_mysql_db }} -e "source /usr/local/src/nacos-mysql.sql;" && touch /tmp/init_nacos_db.txt
      args:
        creates: /tmp/init_nacos_db.txt
      when: inventory_hostname in groups['mysql']

    - name: start nacos cluster
      when: inventory_hostname in groups['nacos']
      shell: /bin/bash startup.sh && touch /tmp/start_nacos_cluster.txt
      args:
        chdir: /usr/local/nacos/bin
        creates: /tmp/start_nacos_cluster.txt
```
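The `get mysql init password` task relies on `grep 'password is generated' | awk '{print $NF}'`, which returns the last field of every matching line in `mysqld.log`. If mysqld was ever initialised twice, `mysql_init_password.stdout` would hold two passwords separated by a newline, and the `ALTER USER` step would fail. Below is a Python sketch of the same extraction that keeps only the newest match. The helper names are hypothetical, and the log line in the usage example is made up, only shaped like MySQL 5.7's message.

```python
from __future__ import annotations


def mysql_temp_passwords(log_text: str) -> list[str]:
    """Python equivalent of:
        grep 'password is generated' /var/log/mysqld.log | awk '{print $NF}'
    i.e. the last whitespace-separated field of every matching line."""
    return [
        line.split()[-1]
        for line in log_text.splitlines()
        if "password is generated" in line
    ]


def latest_temp_password(log_text: str) -> str | None:
    # With several matches the last one is the most recent initialisation.
    found = mysql_temp_passwords(log_text)
    return found[-1] if found else None
```

Usage, assuming the log is readable locally: `latest_temp_password(open("/var/log/mysqld.log").read())`.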

Nginx load-balancer config template

- `groups['nacos']` yields the hosts in the nacos group
- `hostvars[i]['ansible_default_ipv4']['address']` gives each nacos host's private IP

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat ansible-deploy/nginx-nacos.conf.j2

```nginx
upstream nacos {
{% for i in groups['nacos'] %}
    server {{ hostvars[i]['ansible_default_ipv4']['address'] }}:8848;
{% endfor %}
}

server {
    listen 80;
    server_name {{ nacos_nginx_server_name }};
    access_log /var/log/nginx/{{ nacos_nginx_server_name }}-access.log;
    error_log /var/log/nginx/{{ nacos_nginx_server_name }}-error.log;

    location / {
        proxy_pass http://nacos;
        proxy_redirect off;
        proxy_set_header Host $host:$server_port;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Is_EDU 0;
    }
}
```
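The Jinja loop walks `groups['nacos']` and emits one `server` line per host, using each host's private address. Below is a plain-Python sketch of the upstream block it should render. It also validates each address with the standard `ipaddress` module. The function name and the 172.16.0.x addresses are hypothetical; the addresses are chosen to fall inside the vswitch's 172.16.0.0/24 CIDR.

```python
from __future__ import annotations

import ipaddress


def render_upstream(name: str, private_ips: list[str], port: int = 8848) -> str:
    """Build the upstream block nginx-nacos.conf.j2 produces from the
    nacos group's ansible_default_ipv4 addresses."""
    lines = [f"upstream {name} {{"]
    for ip in private_ips:
        ipaddress.ip_address(ip)  # raises ValueError for a malformed address
        lines.append(f"    server {ip}:{port};")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_upstream("nacos", ["172.16.0.10", "172.16.0.11", "172.16.0.12"]))
```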

Nacos config template

- `groups['mysql'][0]` picks the first host in the mysql group
- `hostvars[groups['mysql'][0]]['ansible_default_ipv4']['address']` then gives that host's private IP

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ egrep -v '^#|^$' ansible-deploy/application.properties.j2

```properties
server.servlet.contextPath=/nacos
server.port=8848
spring.datasource.platform=mysql
db.num=1
db.url.0=jdbc:mysql://{{ hostvars[groups['mysql'][0]]['ansible_default_ipv4']['address'] }}:3306/{{ nacos_mysql_db }}?characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useUnicode=true&useSSL=false&serverTimezone=UTC
db.user.0={{ nacos_mysql_user }}
db.password.0={{ nacos_mysql_password }}
db.pool.config.connectionTimeout=30000
db.pool.config.validationTimeout=10000
db.pool.config.maximumPoolSize=20
db.pool.config.minimumIdle=2
nacos.naming.empty-service.auto-clean=true
nacos.naming.empty-service.clean.initial-delay-ms=50000
nacos.naming.empty-service.clean.period-time-ms=30000
management.endpoints.web.exposure.include=*
management.metrics.export.elastic.enabled=false
management.metrics.export.influx.enabled=false
server.tomcat.accesslog.enabled=true
server.tomcat.accesslog.pattern=%h %l %u %t "%r" %s %b %D %{User-Agent}i %{Request-Source}i
server.tomcat.basedir=file:.
nacos.security.ignore.urls=/,/error,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-ui/public/**,/v1/auth/**,/v1/console/health/**,/actuator/**,/v1/console/server/**
nacos.core.auth.system.type=nacos
nacos.core.auth.enabled=false
nacos.core.auth.default.token.expire.seconds=18000
nacos.core.auth.default.token.secret.key=SecretKey012345678901234567890123456789012345678901234567890123456789
nacos.core.auth.caching.enabled=true
nacos.core.auth.enable.userAgentAuthWhite=true
nacos.core.auth.server.identity.key=
nacos.core.auth.server.identity.value=
nacos.istio.mcp.server.enabled=false
```
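The playbook also renders `cluster.conf.j2`, which this post does not show. In Nacos cluster mode, `conf/cluster.conf` lists one cluster member per line as `ip:port`. Below is a Python sketch of what that file might contain, assuming the template loops over the nacos group's private IPs the same way the Nginx template does. The sorting and de-duplication are my own choice, so every node gets an identical file; Nacos does not require them. The IPs are hypothetical.

```python
from __future__ import annotations

import ipaddress


def render_cluster_conf(private_ips: list[str], port: int = 8848) -> str:
    """One Nacos member per line as ip:port, validated, de-duplicated and
    sorted numerically so every node renders the same file."""
    members = sorted({ipaddress.ip_address(ip) for ip in private_ips})
    return "".join(f"{ip}:{port}\n" for ip in members)


if __name__ == "__main__":
    print(render_cluster_conf(["172.16.0.12", "172.16.0.10", "172.16.0.11"]), end="")
```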

Nacos startup script template

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat ansible-deploy/startup.sh

#!/bin/bash

# Copyright 1999-2018 Alibaba Group Holding Ltd.

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

cygwin=false

darwin=false

os400=false

case "`uname`" in

CYGWIN*) cygwin=true;;

Darwin*) darwin=true;;

OS400*) os400=true;;

esac

error_exit ()

{

echo "ERROR: $1 !!"

exit 1

}

[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=$HOME/jdk/java

[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=/usr/java

[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=/opt/taobao/java

[ ! -e "$JAVA_HOME/bin/java" ] && unset JAVA_HOME

if [ -z "$JAVA_HOME" ]; then

if $darwin; then

if [ -x '/usr/libexec/java_home' ] ; then

export JAVA_HOME=`/usr/libexec/java_home`

elif [ -d "/System/Library/Frameworks/JavaVM.framework/Versions/CurrentJDK/Home" ]; then

export JAVA_HOME="/System/Library/Frameworks/JavaVM.framework/Versions/CurrentJDK/Home"

fi

else

JAVA_PATH=`dirname $(readlink -f $(which javac))`

if [ "x$JAVA_PATH" != "x" ]; then

export JAVA_HOME=`dirname $JAVA_PATH 2>/dev/null`

fi

fi

if [ -z "$JAVA_HOME" ]; then

error_exit "Please set the JAVA_HOME variable in your environment, We need java(x64)! jdk8 or later is better!"

fi

fi

export SERVER="nacos-server"

export MODE="cluster"

export FUNCTION_MODE="all"

export MEMBER_LIST=""

export EMBEDDED_STORAGE=""

while getopts ":m:f:s:c:p:" opt

do

case $opt in

m)

MODE=$OPTARG;;

f)

FUNCTION_MODE=$OPTARG;;

s)

SERVER=$OPTARG;;

c)

MEMBER_LIST=$OPTARG;;

p)

EMBEDDED_STORAGE=$OPTARG;;

?)

echo "Unknown parameter"

exit 1;;

esac

done

export JAVA_HOME

export JAVA="$JAVA_HOME/bin/java"

export BASE_DIR=`cd $(dirname $0)/..; pwd`

export CUSTOM_SEARCH_LOCATIONS=file:${BASE_DIR}/conf/

#===========================================================================================

# JVM Configuration

#===========================================================================================

if [[ "${MODE}" == "standalone" ]]; then

JAVA_OPT="${JAVA_OPT} -Xms512m -Xmx512m -Xmn256m"

JAVA_OPT="${JAVA_OPT} -Dnacos.standalone=true"

else

if [[ "${EMBEDDED_STORAGE}" == "embedded" ]]; then

JAVA_OPT="${JAVA_OPT} -DembeddedStorage=true"

fi

JAVA_OPT="${JAVA_OPT} -server -Xms{{ nacos_xms_size }} -Xmx{{ nacos_xmx_size }} -Xmn{{ nacos_xmn_size }} -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m"

JAVA_OPT="${JAVA_OPT} -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=${BASE_DIR}/logs/java_heapdump.hprof"

JAVA_OPT="${JAVA_OPT} -XX:-UseLargePages"

fi

if [[ "${FUNCTION_MODE}" == "config" ]]; then

JAVA_OPT="${JAVA_OPT} -Dnacos.functionMode=config"

elif [[ "${FUNCTION_MODE}" == "naming" ]]; then

JAVA_OPT="${JAVA_OPT} -Dnacos.functionMode=naming"

fi

JAVA_OPT="${JAVA_OPT} -Dnacos.member.list=${MEMBER_LIST}"

JAVA_MAJOR_VERSION=$($JAVA -version 2>&1 | sed -E -n 's/.* version "([0-9]*).*$/\1/p')

if [[ "$JAVA_MAJOR_VERSION" -ge "9" ]] ; then

JAVA_OPT="${JAVA_OPT} -Xlog:gc*:file=${BASE_DIR}/logs/nacos_gc.log:time,tags:filecount=10,filesize=102400"

else

JAVA_OPT="${JAVA_OPT} -Djava.ext.dirs=${JAVA_HOME}/jre/lib/ext:${JAVA_HOME}/lib/ext"

JAVA_OPT="${JAVA_OPT} -Xloggc:${BASE_DIR}/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M"

fi

JAVA_OPT="${JAVA_OPT} -Dloader.path=${BASE_DIR}/plugins/health,${BASE_DIR}/plugins/cmdb"

JAVA_OPT="${JAVA_OPT} -Dnacos.home=${BASE_DIR}"

JAVA_OPT="${JAVA_OPT} -jar ${BASE_DIR}/target/${SERVER}.jar"

JAVA_OPT="${JAVA_OPT} ${JAVA_OPT_EXT}"

JAVA_OPT="${JAVA_OPT} --spring.config.additional-location=${CUSTOM_SEARCH_LOCATIONS}"

JAVA_OPT="${JAVA_OPT} --logging.config=${BASE_DIR}/conf/nacos-logback.xml"

JAVA_OPT="${JAVA_OPT} --server.max-http-header-size=524288"

if [ ! -d "${BASE_DIR}/logs" ]; then

mkdir ${BASE_DIR}/logs

fi

echo "$JAVA ${JAVA_OPT}"

if [[ "${MODE}" == "standalone" ]]; then

echo "nacos is starting with standalone"

else

echo "nacos is starting with cluster"

fi

# check the start.out log output file

if [ ! -f "${BASE_DIR}/logs/start.out" ]; then

touch "${BASE_DIR}/logs/start.out"

fi

# start

echo "$JAVA ${JAVA_OPT}" > ${BASE_DIR}/logs/start.out 2>&1 &

nohup $JAVA ${JAVA_OPT} nacos.nacos >> ${BASE_DIR}/logs/start.out 2>&1 &

echo "nacos is starting,you can check the ${BASE_DIR}/logs/start.out"
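Near the end of startup.sh, the Java version check chooses the GC-logging flags. The Python sketch below mirrors its `sed -E -n 's/.* version "([0-9]*).*$/\1/p'` expression. It shows why a JDK 8 build, which reports itself as `1.8.0_...` and therefore parses as major version `1`, takes the legacy `-Xloggc` branch, while JDK 9 and later take `-Xlog:gc*`. The sample `java -version` strings are illustrative assumptions, not output captured from these hosts.

```python
import re

# Python equivalent of the sed expression used in startup.sh:
#   sed -E -n 's/.* version "([0-9]*).*$/\1/p'
# It captures the leading digits right after `version "`.
VERSION_RE = re.compile(r'.* version "([0-9]*).*$')


def java_major_version(version_output: str) -> str:
    """Return the first captured major version, or "" if no line matches."""
    for line in version_output.splitlines():
        match = VERSION_RE.match(line)
        if match:
            return match.group(1)
    return ""


def gc_log_branch(major: str) -> str:
    """Mirror `[[ "$JAVA_MAJOR_VERSION" -ge "9" ]]`; bash evaluates "" as 0 there."""
    return "-Xlog:gc*" if int(major or 0) >= 9 else "-Xloggc"


if __name__ == "__main__":
    # Illustrative `java -version` outputs (assumed, not captured from the hosts).
    jdk8 = 'openjdk version "1.8.0_352"\nOpenJDK Runtime Environment (build 1.8.0_352-b08)'
    jdk17 = 'openjdk version "17.0.6" 2023-01-17\nOpenJDK Runtime Environment (build 17.0.6+10)'
    for sample in (jdk8, jdk17):
        major = java_major_version(sample)
        print(major, gc_log_branch(major))
```

`java -version` writes to stderr, which is why startup.sh pipes it with `2>&1` before handing it to sed.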

nacos cluster configuration template

xadocker@xadocker-virtual-machine:~/Desktop/workdir/datadir/terraform/tf-nacos-cluster$ cat ansible-deploy/cluster.conf.j2

#

# Copyright 1999-2018 Alibaba Group Holding Ltd.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

#it is ip

#example

{% for i in groups['nacos'] %}

{{ hostvars[i]['ansible_default_ipv4']['address'] }}:8848

{% endfor %}
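The template emits one `ip:8848` line for each host in the inventory group `nacos`. Each address comes from the gathered fact `ansible_default_ipv4.address`, which is the IPv4 address of the interface holding the default route, not from the inventory hostname. On ECS this is typically the private VPC address, which is what the nodes should use to reach each other. It also means facts must have been gathered for every nacos host. Below is a plain-Python sketch of the same loop. The `groups`/`hostvars` data, and in particular the private addresses, are hypothetical.

```python
def render_cluster_conf(groups, hostvars, port=8848):
    """Reproduce the cluster.conf.j2 loop: one "<default ipv4>:<port>" line per nacos host."""
    lines = []
    for host in groups["nacos"]:
        address = hostvars[host]["ansible_default_ipv4"]["address"]
        lines.append(f"{address}:{port}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical data: public IPs as inventory names, private IPs as gathered facts.
    groups = {"nacos": ["8.134.108.88", "8.134.8.241", "8.134.65.121"]}
    hostvars = {
        "8.134.108.88": {"ansible_default_ipv4": {"address": "172.16.0.11"}},
        "8.134.8.241": {"ansible_default_ipv4": {"address": "172.16.0.12"}},
        "8.134.65.121": {"ansible_default_ipv4": {"address": "172.16.0.13"}},
    }
    print(render_cluster_conf(groups, hostvars), end="")
```

If a nacos host's facts are missing, for example when the play runs with `--limit` and excludes that host, the real template fails on the undefined `ansible_default_ipv4`, just as the sketch raises a `KeyError`.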

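Ansible configuration

Prepare the private key file id_rsa yourself. It must be the counterpart of the public key that Terraform installed on the nodes.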

xadocker@xadocker-virtual-machine:~/workdir/datadir/terraform/tf-nacos-cluster$ cat ansible-deploy/ansible.cfg

[defaults]

inventory=./ansible_inventory.ini

host_key_checking=False

private_key_file=./id_rsa

remote_user=root

[privilege_escalation]

become=false

become_method=sudo

become_user=root

become_ask_pass=false
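OpenSSH refuses to use a private key whose permissions are too open (any group or other bits set), so it is worth checking the mode of id_rsa after copying it into ansible-deploy/. Here is a minimal stdlib-only sketch. It assumes a relative `private_key_file` is resolved against the directory that holds ansible.cfg. That matches running ansible-playbook from inside ansible-deploy/, as this post does.

```python
import configparser
import os
import stat


def check_private_key(cfg_path):
    """Return a list of problems with the private_key_file configured in ansible.cfg."""
    parser = configparser.ConfigParser()
    if not parser.read(cfg_path):
        return [f"cannot read {cfg_path}"]

    key = parser.get("defaults", "private_key_file", fallback=None)
    if key is None:
        return ["private_key_file is not set in [defaults]"]

    # Assumption: a relative key path is resolved against the directory holding ansible.cfg.
    key_path = os.path.join(os.path.dirname(os.path.abspath(cfg_path)), key)
    if not os.path.isfile(key_path):
        return [f"private key not found: {key_path}"]

    mode = stat.S_IMODE(os.stat(key_path).st_mode)
    if mode & 0o077:  # any group/other permission bits
        return [f"{key_path} has mode {mode:o}; run chmod 600 on it"]
    return []


if __name__ == "__main__":
    for problem in check_private_key("ansible.cfg"):
        print("WARN:", problem)
```

Fix any warning with `chmod 600 id_rsa` before the first run.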

Directory layout at this point

xadocker@xadocker-virtual-machine:~/workdir/datadir/terraform/tf-nacos-cluster$ tree

.

├── ansible-deploy

│ ├── ansible.cfg

│ ├── ansible_inventory.ini

│ ├── application.properties.j2

│ ├── cluster.conf.j2

│ ├── id_rsa

│ ├── nacos-mysql.sql

│ ├── nacos-playbook.yaml

│ ├── nginx-nacos.conf.j2

│ └── startup.sh

├── ansible_inventory.tpl

├── main.tf

├── providers.tf

├── variables.tf

└── version.tf

1 directory, 14 files

Running the playbook
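Before running the playbook, a quick pre-flight check can catch a missing input. The sketch below only verifies that the nine files in the ansible-deploy/ tree above exist and are non-empty. The file list is copied from that tree. It treats ansible_inventory.ini as Terraform-generated, which is an inference from the ansible_inventory.tpl template in the project root.

```python
import os

# Files listed under ansible-deploy/ in the tree above. ansible_inventory.ini is
# presumably rendered by Terraform from ansible_inventory.tpl, so its absence
# usually means `terraform apply` has not been run yet.
REQUIRED_FILES = (
    "ansible.cfg",
    "ansible_inventory.ini",
    "application.properties.j2",
    "cluster.conf.j2",
    "id_rsa",
    "nacos-mysql.sql",
    "nacos-playbook.yaml",
    "nginx-nacos.conf.j2",
    "startup.sh",
)


def missing_files(deploy_dir="."):
    """Return the required files that are absent or empty in deploy_dir."""
    missing = []
    for name in REQUIRED_FILES:
        path = os.path.join(deploy_dir, name)
        if not os.path.isfile(path) or os.path.getsize(path) == 0:
            missing.append(name)
    return missing


if __name__ == "__main__":
    absent = missing_files()
    if absent:
        raise SystemExit("missing or empty: " + ", ".join(absent))
    print("all playbook inputs present")
```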

xadocker@xadocker-virtual-machine:~/workdir/datadir/terraform/tf-nacos-cluster$ cd ansible-deploy/

xadocker@xadocker-virtual-machine:~/workdir/datadir/terraform/tf-nacos-cluster/ansible-deploy$ sudo ansible-playbook nacos-playbook.yaml

PLAY [install nacos] *************************************************************************************************************************************************************************

TASK [Gathering Facts] ***********************************************************************************************************************************************************************

ok: [8.134.114.171]

ok: [8.134.79.6]

ok: [8.134.108.88]

ok: [8.134.8.241]

ok: [8.134.65.121]

TASK [get java rpm] **************************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.79.6]

changed: [8.134.108.88]

changed: [8.134.8.241]

changed: [8.134.65.121]

TASK [install java] **************************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.79.6]

changed: [8.134.65.121]

changed: [8.134.108.88]

changed: [8.134.8.241]

TASK [get nacos zip] *************************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.79.6]

changed: [8.134.8.241]

changed: [8.134.65.121]

changed: [8.134.108.88]

TASK [unarchive nacos] ***********************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.79.6]

changed: [8.134.65.121]

changed: [8.134.108.88]

changed: [8.134.8.241]

TASK [render nacos config] *******************************************************************************************************************************************************************

skipping: [8.134.114.171] => (item={u'dest': u'/usr/local/nacos/conf/application.properties', u'src': u'./application.properties.j2'})

skipping: [8.134.114.171] => (item={u'dest': u'/usr/local/nacos/conf/cluster.conf', u'src': u'./cluster.conf.j2'})

skipping: [8.134.114.171] => (item={u'dest': u'/usr/local/nacos/bin/startup.sh', u'src': u'./startup.sh'})

skipping: [8.134.79.6] => (item={u'dest': u'/usr/local/nacos/conf/application.properties', u'src': u'./application.properties.j2'})

skipping: [8.134.79.6] => (item={u'dest': u'/usr/local/nacos/conf/cluster.conf', u'src': u'./cluster.conf.j2'})

skipping: [8.134.79.6] => (item={u'dest': u'/usr/local/nacos/bin/startup.sh', u'src': u'./startup.sh'})

changed: [8.134.108.88] => (item={u'dest': u'/usr/local/nacos/conf/application.properties', u'src': u'./application.properties.j2'})

changed: [8.134.65.121] => (item={u'dest': u'/usr/local/nacos/conf/application.properties', u'src': u'./application.properties.j2'})

changed: [8.134.8.241] => (item={u'dest': u'/usr/local/nacos/conf/application.properties', u'src': u'./application.properties.j2'})

changed: [8.134.65.121] => (item={u'dest': u'/usr/local/nacos/conf/cluster.conf', u'src': u'./cluster.conf.j2'})

changed: [8.134.108.88] => (item={u'dest': u'/usr/local/nacos/conf/cluster.conf', u'src': u'./cluster.conf.j2'})

changed: [8.134.8.241] => (item={u'dest': u'/usr/local/nacos/conf/cluster.conf', u'src': u'./cluster.conf.j2'})

changed: [8.134.65.121] => (item={u'dest': u'/usr/local/nacos/bin/startup.sh', u'src': u'./startup.sh'})

changed: [8.134.108.88] => (item={u'dest': u'/usr/local/nacos/bin/startup.sh', u'src': u'./startup.sh'})

changed: [8.134.8.241] => (item={u'dest': u'/usr/local/nacos/bin/startup.sh', u'src': u'./startup.sh'})

TASK [Installed Nginx repo] ******************************************************************************************************************************************************************

skipping: [8.134.79.6]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.114.171]

TASK [install nginx] *************************************************************************************************************************************************************************

skipping: [8.134.79.6]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.114.171]

TASK [render nginx lb config for nacos] ******************************************************************************************************************************************************

skipping: [8.134.79.6]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.114.171]

TASK [start nginx] ***************************************************************************************************************************************************************************

skipping: [8.134.79.6]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.114.171]

TASK [Installed mysql repo] ******************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.79.6]

TASK [install mysql] *************************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.79.6]

TASK [start mysqld] **************************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.79.6]

TASK [get mysql init password] ***************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.79.6]

TASK [output mysql_init_password] ************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

ok: [8.134.79.6] => {

"msg": "stoyS#f+m6f;"

}

TASK [update mysql root password] ************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.79.6]

TASK [outputsome] ****************************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

ok: [8.134.79.6] => {

"msg": {

"changed": true,

"cmd": "mysql --connect-expired-password -uroot -p\"stoyS#f+m6f;\" -e \"ALTER USER 'root'@'localhost' IDENTIFIED BY '1qaz@WSX@NNNN'\" && touch /tmp/update_mysql_root_password.txt",

"delta": "0:00:00.055281",

"end": "2023-02-05 18:27:51.766298",

"failed": false,

"rc": 0,

"start": "2023-02-05 18:27:51.711017",

"stderr": "mysql: [Warning] Using a password on the command line interface can be insecure.",

"stderr_lines": [

"mysql: [Warning] Using a password on the command line interface can be insecure."

],

"stdout": "",

"stdout_lines": []

}

}

skipping: [8.134.8.241]

skipping: [8.134.65.121]

TASK [copy nacos-mysql.sql] ******************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.79.6]

TASK [init db nacos] *************************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.108.88]

skipping: [8.134.8.241]

skipping: [8.134.65.121]

changed: [8.134.79.6]

TASK [start nacos cluster] *******************************************************************************************************************************************************************

skipping: [8.134.114.171]

skipping: [8.134.79.6]

changed: [8.134.65.121]

changed: [8.134.108.88]

changed: [8.134.8.241]

PLAY RECAP ***********************************************************************************************************************************************************************************

8.134.108.88 : ok=7 changed=6 unreachable=0 failed=0

8.134.114.171 : ok=5 changed=4 unreachable=0 failed=0

8.134.65.121 : ok=7 changed=6 unreachable=0 failed=0

8.134.79.6 : ok=10 changed=7 unreachable=0 failed=0

8.134.8.241 : ok=7 changed=6 unreachable=0 failed=0
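For scripted runs, such as the Jenkins setup suggested at the end, the PLAY RECAP is the quickest thing to inspect. The minimal parser below assumes the `host : key=value ...` layout shown above. Newer Ansible versions print extra counters such as `skipped` and `rescued`, and the parser picks those up as well.

```python
import re

# One recap line: "<host> : ok=7 changed=6 unreachable=0 failed=0 ..."
RECAP_LINE = re.compile(r"^(?P<host>\S+)\s*:\s*(?P<counters>(?:\w+=\d+\s*)+)$")


def parse_recap(text):
    """Map each host in a PLAY RECAP block to its counters, e.g. {"ok": 7, ...}."""
    result = {}
    for line in text.splitlines():
        match = RECAP_LINE.match(line.strip())
        if not match:
            continue  # task headers, task results, and other output are ignored
        pairs = re.findall(r"(\w+)=(\d+)", match.group("counters"))
        result[match.group("host")] = {key: int(value) for key, value in pairs}
    return result


def failed_hosts(recap):
    """Return the hosts that reported any failed or unreachable tasks, sorted."""
    return sorted(
        host for host, counters in recap.items()
        if counters.get("failed", 0) or counters.get("unreachable", 0)
    )


if __name__ == "__main__":
    import sys

    recap = parse_recap(sys.stdin.read())
    bad = failed_hosts(recap)
    if bad:
        raise SystemExit("failed/unreachable: " + ", ".join(bad))
    print(f"{len(recap)} hosts OK")
```

ansible-playbook already exits non-zero when a host fails or is unreachable, so this is mainly useful for a per-host summary in CI logs, e.g. `ansible-playbook nacos-playbook.yaml | python3 check_recap.py`. The script name is hypothetical.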

Follow-up improvements

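- Terraform resource configuration

  - Create the ECS instances without public IPs so that the nacos cluster is reachable only from the internal network. The nodes have public IPs here only because I was testing from outside.

- Ansible playbook

  - Use block to remove the many repeated when conditions, or go straight to a roles-based layout.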
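The block idea works because a `when` placed on an Ansible block applies to every task inside it. The sketch below shows the transformation on plain Python dicts. It folds consecutive tasks that share the same `when` into a single block that carries the condition. The task names come from the run log above. The `when` expressions and the `nginx` group name are hypothetical, because the playbook itself is not shown in this section.

```python
import json
from itertools import groupby


def fold_into_blocks(tasks):
    """Group consecutive tasks with an identical `when` into Ansible-style blocks."""
    folded = []
    for condition, group in groupby(tasks, key=lambda task: task.get("when")):
        group = list(group)
        if condition is None or len(group) == 1:
            folded.extend(group)  # nothing to share, keep the tasks as they are
            continue
        # Drop the per-task condition; the block-level `when` now covers it.
        inner = [{k: v for k, v in task.items() if k != "when"} for task in group]
        folded.append({"block": inner, "when": condition})
    return folded


if __name__ == "__main__":
    # Hypothetical conditions; task names taken from the run log above.
    tasks = [
        {"name": "get java rpm", "when": "inventory_hostname in groups['nacos']"},
        {"name": "install java", "when": "inventory_hostname in groups['nacos']"},
        {"name": "Installed Nginx repo", "when": "inventory_hostname in groups['nginx']"},
        {"name": "install nginx", "when": "inventory_hostname in groups['nginx']"},
    ]
    print(json.dumps(fold_into_blocks(tasks), indent=2))
```

Moving each group into its own role and targeting it with a separate play's `hosts:` would remove these conditions entirely, which is the roles option mentioned in the list.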

Later, this project directory could also be hosted in GitLab and managed GitOps-style together with Jenkins.

