
Kafka is a distributed stream-processing platform. How do you run it on Kubernetes (k8s)?

First, quickly create a k8s cluster with Terraform + Ansible:

[root@master-0 ~]# kubectl get nodes
NAME       STATUS   ROLES                  AGE     VERSION
master-0   Ready    control-plane,master   8m41s   v1.22.15
worker-0   Ready    <none>                 6m12s   v1.22.15
worker-1   Ready    <none>                 5m32s   v1.22.15
worker-2   Ready    <none>                 5m2s    v1.22.15

Choosing a storage class

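In the past I used network storage such as NFS to back the storage class, but its performance is lower than that of local disks, so this time I use a local storage class. For distributed middleware like Kafka, it is best to let the software's own replication handle data synchronization between nodes.

The local-path storage class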
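The local-path provisioner installed below decides where on a node a volume is created from the `nodePathMap` in its `config.json`. A node-specific entry is used if one exists. Otherwise it falls back to the `DEFAULT_PATH_FOR_NON_LISTED_NODES` entry. The following is a minimal Python sketch of that lookup. The helper name `resolve_base_path` is hypothetical, and the sketch simply takes the first listed path. How the real provisioner chooses among several paths is not modeled here.

```python
import json

# config.json as shipped in the local-path-config ConfigMap of this post
CONFIG_JSON = """
{
  "nodePathMap": [
    {
      "node": "DEFAULT_PATH_FOR_NON_LISTED_NODES",
      "paths": ["/opt/local-path-provisioner"]
    }
  ]
}
"""

DEFAULT_KEY = "DEFAULT_PATH_FOR_NON_LISTED_NODES"


def resolve_base_path(config_json: str, node_name: str) -> str:
    """Return the base directory a volume on `node_name` would use (sketch)."""
    entries = json.loads(config_json)["nodePathMap"]
    by_node = {entry["node"]: entry["paths"] for entry in entries}
    # A node-specific entry wins; otherwise fall back to the default entry.
    paths = by_node.get(node_name, by_node.get(DEFAULT_KEY))
    if not paths:
        raise LookupError(f"no storage path configured for node {node_name!r}")
    # Assumption: take the first path; the provisioner's own choice among
    # several paths is not modeled here.
    return paths[0]


if __name__ == "__main__":
    for node in ("worker-0", "worker-1", "worker-2"):
        print(node, "->", resolve_base_path(CONFIG_JSON, node))
```

With the default config, every worker stores its volumes under `/opt/local-path-provisioner`. To send one node's volumes to a dedicated disk, add a separate `{"node": "worker-0", "paths": [...]}` entry to `nodePathMap`.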

[root@master-0 ~]# kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

namespace/local-path-storage created

serviceaccount/local-path-provisioner-service-account created

clusterrole.rbac.authorization.k8s.io/local-path-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created

deployment.apps/local-path-provisioner created

storageclass.storage.k8s.io/local-path created

configmap/local-path-config created

[root@master-0 ~]# cat local-path-storage.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: local-path-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
  - apiGroups: [ "" ]
    resources: [ "nodes", "persistentvolumeclaims", "configmaps" ]
    verbs: [ "get", "list", "watch" ]
  - apiGroups: [ "" ]
    resources: [ "endpoints", "persistentvolumes", "pods" ]
    verbs: [ "*" ]
  - apiGroups: [ "" ]
    resources: [ "events" ]
    verbs: [ "create", "patch" ]
  - apiGroups: [ "storage.k8s.io" ]
    resources: [ "storageclasses" ]
    verbs: [ "get", "list", "watch" ]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
        - name: local-path-provisioner
          image: rancher/local-path-provisioner:master-head
          imagePullPolicy: IfNotPresent
          command:
            - local-path-provisioner
            - --debug
            - start
            - --config
            - /etc/config/config.json
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config/
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      volumes:
        - name: config-volume
          configMap:
            name: local-path-config
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/opt/local-path-provisioner"]
        }
      ]
    }
  setup: |-
    #!/bin/sh
    set -eu
    mkdir -m 0777 -p "$VOL_DIR"
  teardown: |-
    #!/bin/sh
    set -eu
    rm -rf "$VOL_DIR"
  helperPod.yaml: |-
    apiVersion: v1
    kind: Pod
    metadata:
      name: helper-pod
    spec:
      containers:
      - name: helper-pod
        image: busybox
        imagePullPolicy: IfNotPresent

Kafka deployment

Deploying the operator

There are two popular Kafka operators. Here I use the Strimzi operator.

# Create the namespace

[root@master-0 ~]# kubectl create namespace kafka

# Deploy the operator

[root@master-0 ~]# wget --no-check-certificate 'https://strimzi.io/install/latest?namespace=kafka' -O install.yaml

[root@master-0 ~]# kubectl apply -f install.yaml

[root@master-0 ~]# kubectl get pods -n kafka

NAME READY STATUS RESTARTS AGE

strimzi-cluster-operator-7b4f8f8496-gsnt5 1/1 Running 0 3m55s

[root@master-0 ~]#

[root@master-0 ~]# kubectl get all -n kafka

NAME READY STATUS RESTARTS AGE

pod/strimzi-cluster-operator-7b4f8f8496-gsnt5 1/1 Running 0 4m2s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/strimzi-cluster-operator 1/1 1 1 4m2s

NAME DESIRED CURRENT READY AGE

replicaset.apps/strimzi-cluster-operator-7b4f8f8496 1 1 1 4m2s

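Deploying the Kafka cluster

Create a Kafka cluster persisted on local disks.

# Download the persistent-storage example

[root@master-0 ~]# wget --no-check-certificate https://strimzi.io/examples/latest/kafka/kafka-persistent-single.yaml

# Adjust the example file and hook it up to Prometheus monitoring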

[root@master-0 ~]# cat kafka-persistent-single.yaml

apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
  namespace: kafka
spec:
  kafka:
    version: 3.4.0
    replicas: 3
    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
    config:
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 1
      default.replication.factor: 3
      min.insync.replicas: 1
      inter.broker.protocol.version: "3.4"
    storage:
      type: jbod
      volumes:
        - id: 0
          type: persistent-claim
          class: local-path
          size: 30Gi
          deleteClaim: false
    metricsConfig:
      type: jmxPrometheusExporter
      valueFrom:
        configMapKeyRef:
          name: kafka-metrics
          key: kafka-metrics-config.yml
  zookeeper:
    replicas: 1
    storage:
      type: persistent-claim
      size: 30Gi
      class: local-path
      deleteClaim: false
    metricsConfig:
      type: jmxPrometheusExporter
      valueFrom:
        configMapKeyRef:
          name: kafka-metrics
          key: zookeeper-metrics-config.yml
  entityOperator:
    topicOperator: {}
    userOperator: {}
  kafkaExporter:
    topicRegex: ".*"
    groupRegex: ".*"

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kafka-metrics
  namespace: kafka # the namespace must be added here
  labels:
    app: strimzi
data:
  kafka-metrics-config.yml: |
    # See https://github.com/prometheus/jmx_exporter for more info about JMX Prometheus Exporter metrics
    lowercaseOutputName: true
    rules:
    # Special cases and very specific rules
    - pattern: kafka.server<type=(.+), name=(.+), clientId=(.+), topic=(.+), partition=(.*)><>Value
      name: kafka_server_$1_$2
      type: GAUGE
      labels:
        clientId: "$3"
        topic: "$4"
        partition: "$5"
    - pattern: kafka.server<type=(.+), name=(.+), clientId=(.+), brokerHost=(.+), brokerPort=(.+)><>Value
      name: kafka_server_$1_$2
      type: GAUGE
      labels:
        clientId: "$3"
        broker: "$4:$5"
    - pattern: kafka.server<type=(.+), cipher=(.+), protocol=(.+), listener=(.+), networkProcessor=(.+)><>connections
      name: kafka_server_$1_connections_tls_info
      type: GAUGE
      labels:
        cipher: "$2"
        protocol: "$3"
        listener: "$4"
        networkProcessor: "$5"
    - pattern: kafka.server<type=(.+), clientSoftwareName=(.+), clientSoftwareVersion=(.+), listener=(.+), networkProcessor=(.+)><>connections
      name: kafka_server_$1_connections_software
      type: GAUGE
      labels:
        clientSoftwareName: "$2"
        clientSoftwareVersion: "$3"
        listener: "$4"
        networkProcessor: "$5"
    - pattern: "kafka.server<type=(.+), listener=(.+), networkProcessor=(.+)><>(.+):"
      name: kafka_server_$1_$4
      type: GAUGE
      labels:
        listener: "$2"
        networkProcessor: "$3"
    - pattern: kafka.server<type=(.+), listener=(.+), networkProcessor=(.+)><>(.+)
      name: kafka_server_$1_$4
      type: GAUGE
      labels:
        listener: "$2"
        networkProcessor: "$3"
    # Some percent metrics use MeanRate attribute
    # Ex) kafka.server<type=(KafkaRequestHandlerPool), name=(RequestHandlerAvgIdlePercent)><>MeanRate
    - pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*><>MeanRate
      name: kafka_$1_$2_$3_percent
      type: GAUGE
    # Generic gauges for percents
    - pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*><>Value
      name: kafka_$1_$2_$3_percent
      type: GAUGE
    - pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*, (.+)=(.+)><>Value
      name: kafka_$1_$2_$3_percent
      type: GAUGE
      labels:
        "$4": "$5"
    # Generic per-second counters with 0-2 key/value pairs
    - pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*, (.+)=(.+), (.+)=(.+)><>Count
      name: kafka_$1_$2_$3_total
      type: COUNTER
      labels:
        "$4": "$5"
        "$6": "$7"
    - pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*, (.+)=(.+)><>Count
      name: kafka_$1_$2_$3_total
      type: COUNTER
      labels:
        "$4": "$5"
    - pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*><>Count
      name: kafka_$1_$2_$3_total
      type: COUNTER
    # Generic gauges with 0-2 key/value pairs
    - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+), (.+)=(.+)><>Value
      name: kafka_$1_$2_$3
      type: GAUGE
      labels:
        "$4": "$5"
        "$6": "$7"
    - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+)><>Value
      name: kafka_$1_$2_$3
      type: GAUGE
      labels:
        "$4": "$5"
    - pattern: kafka.(\w+)<type=(.+), name=(.+)><>Value
      name: kafka_$1_$2_$3
      type: GAUGE
    # Emulate Prometheus 'Summary' metrics for the exported 'Histogram's.
    # Note that these are missing the '_sum' metric!
    - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+), (.+)=(.+)><>Count
      name: kafka_$1_$2_$3_count
      type: COUNTER
      labels:
        "$4": "$5"
        "$6": "$7"
    - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.*), (.+)=(.+)><>(\d+)thPercentile
      name: kafka_$1_$2_$3
      type: GAUGE
      labels:
        "$4": "$5"
        "$6": "$7"
        quantile: "0.$8"
    - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+)><>Count
      name: kafka_$1_$2_$3_count
      type: COUNTER
      labels:
        "$4": "$5"
    - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.*)><>(\d+)thPercentile
      name: kafka_$1_$2_$3
      type: GAUGE
      labels:
        "$4": "$5"
        quantile: "0.$6"
    - pattern: kafka.(\w+)<type=(.+), name=(.+)><>Count
      name: kafka_$1_$2_$3_count
      type: COUNTER
    - pattern: kafka.(\w+)<type=(.+), name=(.+)><>(\d+)thPercentile
      name: kafka_$1_$2_$3
      type: GAUGE
      labels:
        quantile: "0.$4"
  zookeeper-metrics-config.yml: |
    # See https://github.com/prometheus/jmx_exporter for more info about JMX Prometheus Exporter metrics
    lowercaseOutputName: true
    rules:
    # replicated Zookeeper
    - pattern: "org.apache.ZooKeeperService<name0=ReplicatedServer_id(\\d+)><>(\\w+)"
      name: "zookeeper_$2"
      type: GAUGE
    - pattern: "org.apache.ZooKeeperService<name0=ReplicatedServer_id(\\d+), name1=replica.(\\d+)><>(\\w+)"
      name: "zookeeper_$3"
      type: GAUGE
      labels:
        replicaId: "$2"
    - pattern: "org.apache.ZooKeeperService<name0=ReplicatedServer_id(\\d+), name1=replica.(\\d+), name2=(\\w+)><>(Packets\\w+)"
      name: "zookeeper_$4"
      type: COUNTER
      labels:
        replicaId: "$2"
        memberType: "$3"
    - pattern: "org.apache.ZooKeeperService<name0=ReplicatedServer_id(\\d+), name1=replica.(\\d+), name2=(\\w+)><>(\\w+)"
      name: "zookeeper_$4"
      type: GAUGE
      labels:
        replicaId: "$2"
        memberType: "$3"
    - pattern: "org.apache.ZooKeeperService<name0=ReplicatedServer_id(\\d+), name1=replica.(\\d+), name2=(\\w+), name3=(\\w+)><>(\\w+)"
      name: "zookeeper_$4_$5"
      type: GAUGE
      labels:
        replicaId: "$2"
        memberType: "$3"

[root@master-0 ~]# kubectl apply -f kafka-persistent-single.yaml

kafka.kafka.strimzi.io/my-cluster created

configmap/kafka-metrics created

[root@master-0 ~]# kubectl get all -n kafka

NAME READY STATUS RESTARTS AGE

pod/my-cluster-entity-operator-8bd4548d9-vg6x2 2/3 Running 0 117s

pod/my-cluster-kafka-0 1/1 Running 0 3m3s

pod/my-cluster-kafka-1 1/1 Running 0 3m3s

pod/my-cluster-kafka-2 1/1 Running 0 3m3s

pod/my-cluster-zookeeper-0 1/1 Running 0 4m18s

pod/strimzi-cluster-operator-7b4f8f8496-gsnt5 1/1 Running 0 10m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/my-cluster-kafka-bootstrap ClusterIP 10.105.141.167 <none> 9091/TCP,9092/TCP,9093/TCP 3m4s

service/my-cluster-kafka-brokers ClusterIP None <none> 9090/TCP,9091/TCP,8443/TCP,9092/TCP,9093/TCP 3m4s

service/my-cluster-zookeeper-client ClusterIP 10.101.129.39 <none> 2181/TCP 4m19s

service/my-cluster-zookeeper-nodes ClusterIP None <none> 2181/TCP,2888/TCP,3888/TCP 4m19s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/my-cluster-entity-operator 0/1 1 0 117s

deployment.apps/strimzi-cluster-operator 1/1 1 1 10m

NAME DESIRED CURRENT READY AGE

replicaset.apps/my-cluster-entity-operator-8bd4548d9 1 1 0 117s

replicaset.apps/strimzi-cluster-operator-7b4f8f8496 1 1 1 10m
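The cluster above combines `default.replication.factor: 3` with `min.insync.replicas: 1`. With `acks=all`, which the perf test later in this post uses, a partition keeps accepting writes as long as its in-sync replica set has at least `min.insync.replicas` members. That makes the trade-off easy to work out. Below is a small Python sketch under simplifying assumptions: each replica of a partition sits on a different broker, and unclean leader election and ISR timing are ignored. The function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicationSettings:
    replication_factor: int   # default.replication.factor
    min_insync_replicas: int  # min.insync.replicas

    def __post_init__(self):
        if not 1 <= self.min_insync_replicas <= self.replication_factor:
            raise ValueError("need 1 <= min.insync.replicas <= replication factor")


def replicas_that_can_fail(s: ReplicationSettings) -> int:
    """Replicas of one partition that may drop out while acks=all writes still succeed."""
    return s.replication_factor - s.min_insync_replicas


def min_copies_of_acked_write(s: ReplicationSettings) -> int:
    """Lower bound on how many replicas hold a write that acks=all confirmed."""
    return s.min_insync_replicas


if __name__ == "__main__":
    # 3/1 is this post's setting; 3/2 is shown only for comparison.
    for s in (ReplicationSettings(3, 1), ReplicationSettings(3, 2)):
        print(f"RF={s.replication_factor}, min.insync.replicas={s.min_insync_replicas}: "
              f"writes survive {replicas_that_can_fail(s)} lost replica(s); "
              f"acked data is on >= {min_copies_of_acked_write(s)} replica(s)")
```

The setting used here maximizes write availability: two of three replicas can be lost. The cost is that an acknowledged write may exist on a single broker only. Raising `min.insync.replicas` to 2 reverses that trade-off.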

Monitoring Kafka with Prometheus

[root@master-0 ~]# git clone https://github.com/prometheus-operator/kube-prometheus

Cloning into 'kube-prometheus'...

remote: Enumerating objects: 18329, done.

remote: Counting objects: 100% (2775/2775), done.

remote: Compressing objects: 100% (222/222), done.

remote: Total 18329 (delta 2632), reused 2585 (delta 2546), pack-reused 15554

Receiving objects: 100% (18329/18329), 9.64 MiB | 7.30 MiB/s, done.

Resolving deltas: 100% (12260/12260), done.

[root@master-0 ~]# cd kube-prometheus/

[root@master-0 kube-prometheus]# git checkout release-0.9

Branch release-0.9 set up to track remote branch release-0.9 from origin.

Switched to a new branch 'release-0.9'

[root@master-0 kube-prometheus]# cd manifests/

[root@master-0 manifests]# cat prometheus-clusterRole.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.29.1
  name: prometheus-k8s
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs: # modified: lets Prometheus get/list/watch these resources in all namespaces, including kafka
  - get
  - list
  - watch
- nonResourceURLs:
  - /metrics
  verbs:
  - get

[root@master-0 manifests]# kubectl apply -f setup/

namespace/monitoring created

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

service/prometheus-operator created

serviceaccount/prometheus-operator created

[root@master-0 manifests]# kubectl apply -f .

alertmanager.monitoring.coreos.com/main created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/alertmanager-main created

prometheusrule.monitoring.coreos.com/alertmanager-main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-datasources created

configmap/grafana-dashboard-alertmanager-overview created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

poddisruptionbudget.policy/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

servicemonitor.monitoring.coreos.com/prometheus-operator created

poddisruptionbudget.policy/prometheus-k8s created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-k8s created

Creating the Kafka PodMonitors

# Pay attention to the namespace and to namespaceSelector

[root@master-0 ~]# cat strimzi-pod-monitor.yaml

apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: cluster-operator-metrics
  labels:
    app: strimzi
spec:
  selector:
    matchLabels:
      strimzi.io/kind: cluster-operator
  namespaceSelector:
    matchNames:
      - kafka
  podMetricsEndpoints:
  - path: /metrics
    port: http
---
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: entity-operator-metrics
  labels:
    app: strimzi
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: entity-operator
  namespaceSelector:
    matchNames:
      - kafka
  podMetricsEndpoints:
  - path: /metrics
    port: healthcheck
---
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: bridge-metrics
  labels:
    app: strimzi
spec:
  selector:
    matchLabels:
      strimzi.io/kind: KafkaBridge
  namespaceSelector:
    matchNames:
      - kafka
  podMetricsEndpoints:
  - path: /metrics
    port: rest-api
---
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: kafka-resources-metrics
  labels:
    app: strimzi
spec:
  selector:
    matchExpressions:
      - key: "strimzi.io/kind"
        operator: In
        values: ["Kafka", "KafkaConnect", "KafkaMirrorMaker", "KafkaMirrorMaker2"]
  namespaceSelector:
    matchNames:
      - kafka
  podMetricsEndpoints:
  - path: /metrics
    port: tcp-prometheus
    relabelings:
    - separator: ;
      regex: __meta_kubernetes_pod_label_(strimzi_io_.+)
      replacement: $1
      action: labelmap
    - sourceLabels: [__meta_kubernetes_namespace]
      separator: ;
      regex: (.*)
      targetLabel: namespace
      replacement: $1
      action: replace
    - sourceLabels: [__meta_kubernetes_pod_name]
      separator: ;
      regex: (.*)
      targetLabel: kubernetes_pod_name
      replacement: $1
      action: replace
    - sourceLabels: [__meta_kubernetes_pod_node_name]
      separator: ;
      regex: (.*)
      targetLabel: node_name
      replacement: $1
      action: replace
    - sourceLabels: [__meta_kubernetes_pod_host_ip]
      separator: ;
      regex: (.*)
      targetLabel: node_ip
      replacement: $1
      action: replace
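The `relabelings` of `kafka-resources-metrics` turn Kubernetes service-discovery metadata into metric labels. `labelmap` copies every pod label whose sanitized name matches `__meta_kubernetes_pod_label_(strimzi_io_.+)`, so `strimzi.io/kind` becomes `strimzi_io_kind`. Each `replace` rule copies one meta label into a target label. A simplified Python model of just these two actions is below. It assumes Prometheus's fully anchored regex matching and ignores other actions and edge cases such as empty results. The sample pod metadata is hypothetical.

```python
import re


def _expand(match, template: str) -> str:
    # Prometheus writes capture-group references as $1, $2, ...
    return re.sub(r"\$(\d+)", lambda g: match.group(int(g.group(1))) or "", template)


def relabel(labels: dict, rules: list) -> dict:
    """Apply a simplified subset of Prometheus relabeling (replace and labelmap)."""
    out = dict(labels)
    for rule in rules:
        regex = re.compile(rule.get("regex", "(.*)"))
        replacement = rule.get("replacement", "$1")
        action = rule.get("action", "replace")
        if action == "labelmap":
            # Copy each label whose *name* fully matches, under the expanded name.
            for name, value in list(out.items()):
                m = regex.fullmatch(name)
                if m:
                    out[_expand(m, replacement)] = value
        elif action == "replace":
            # Join the source values, match the whole string, write targetLabel.
            separator = rule.get("separator", ";")
            joined = separator.join(out.get(src, "") for src in rule.get("sourceLabels", []))
            m = regex.fullmatch(joined)
            if m:
                out[rule["targetLabel"]] = _expand(m, replacement)
        else:
            raise NotImplementedError(f"action {action!r} is not modeled")
    # Labels starting with "__" are dropped once target relabeling is done.
    return {k: v for k, v in out.items() if not k.startswith("__")}


# Hypothetical discovery metadata for one broker pod.
DISCOVERED = {
    "__meta_kubernetes_namespace": "kafka",
    "__meta_kubernetes_pod_name": "my-cluster-kafka-0",
    "__meta_kubernetes_pod_node_name": "worker-0",
    "__meta_kubernetes_pod_host_ip": "10.0.0.11",
    "__meta_kubernetes_pod_label_strimzi_io_kind": "Kafka",
    "__meta_kubernetes_pod_label_strimzi_io_cluster": "my-cluster",
}

# The same rules as the PodMonitor above.
RULES = [
    {"regex": r"__meta_kubernetes_pod_label_(strimzi_io_.+)", "replacement": "$1", "action": "labelmap"},
    {"sourceLabels": ["__meta_kubernetes_namespace"], "targetLabel": "namespace"},
    {"sourceLabels": ["__meta_kubernetes_pod_name"], "targetLabel": "kubernetes_pod_name"},
    {"sourceLabels": ["__meta_kubernetes_pod_node_name"], "targetLabel": "node_name"},
    {"sourceLabels": ["__meta_kubernetes_pod_host_ip"], "targetLabel": "node_ip"},
]

if __name__ == "__main__":
    for key, value in sorted(relabel(DISCOVERED, RULES).items()):
        print(f"{key}={value}")
```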

[root@master-0 ~]# kubectl apply -f strimzi-pod-monitor.yaml -n kafka

podmonitor.monitoring.coreos.com/cluster-operator-metrics created

podmonitor.monitoring.coreos.com/entity-operator-metrics created

podmonitor.monitoring.coreos.com/bridge-metrics created

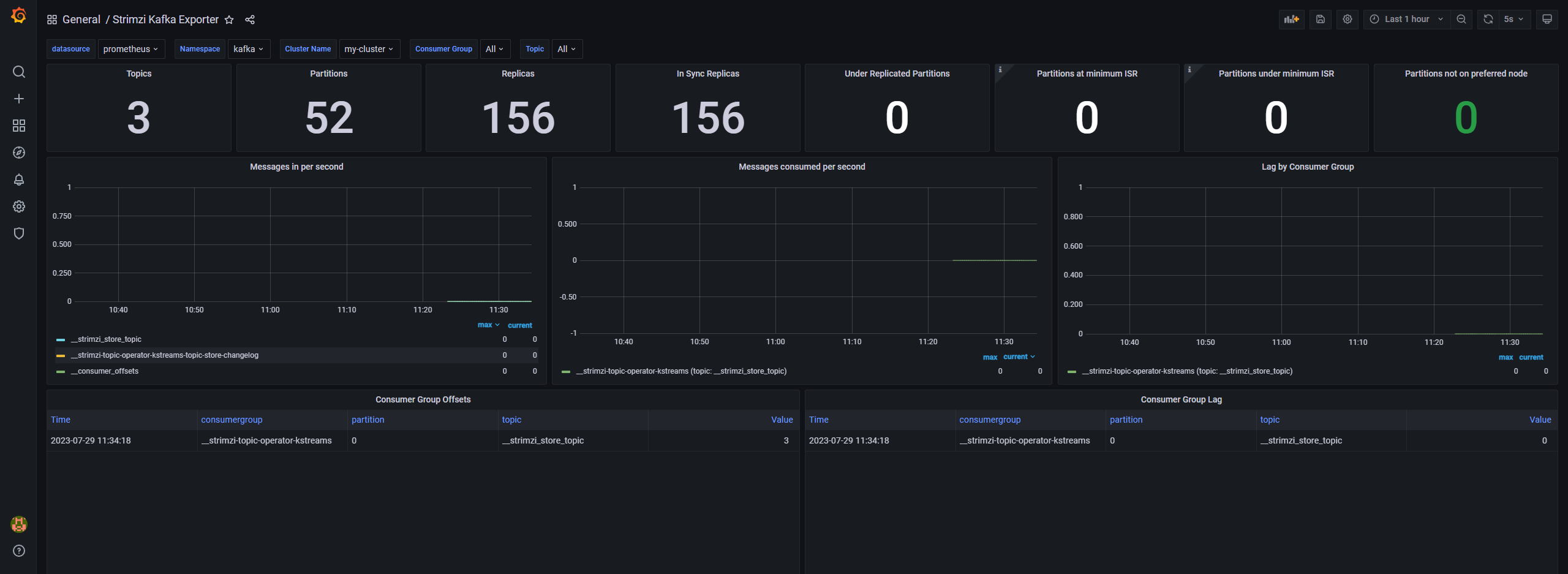

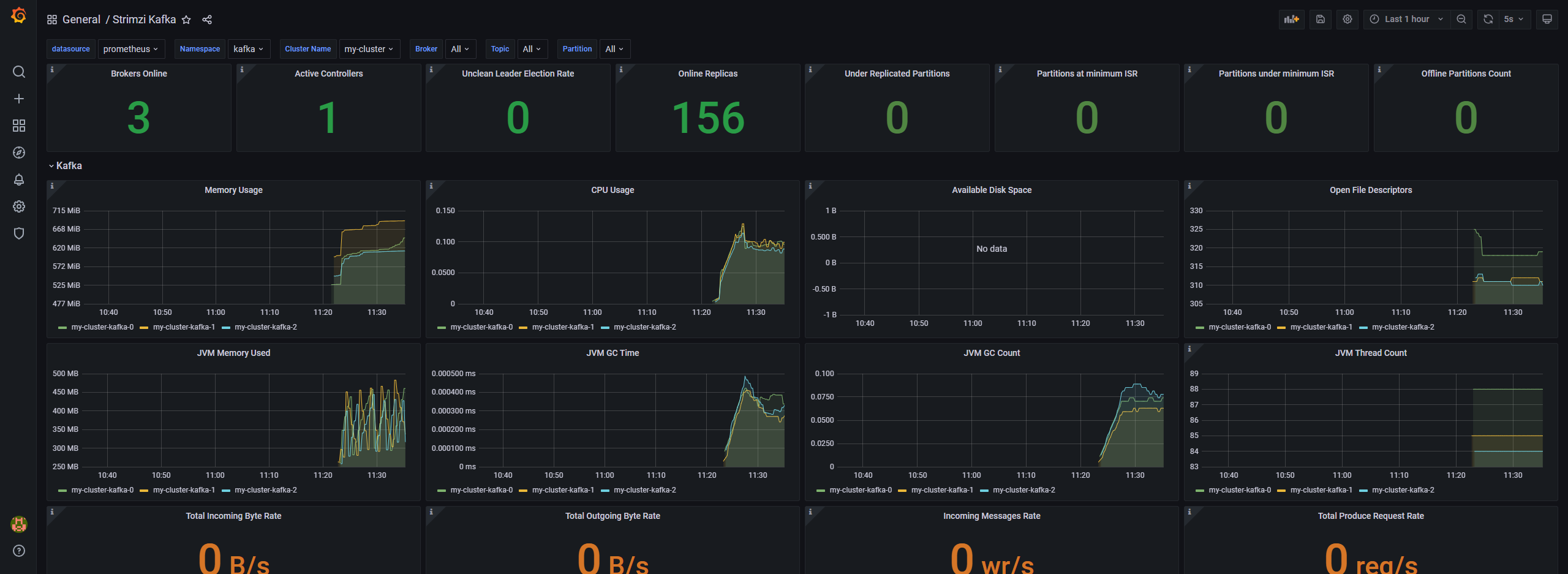

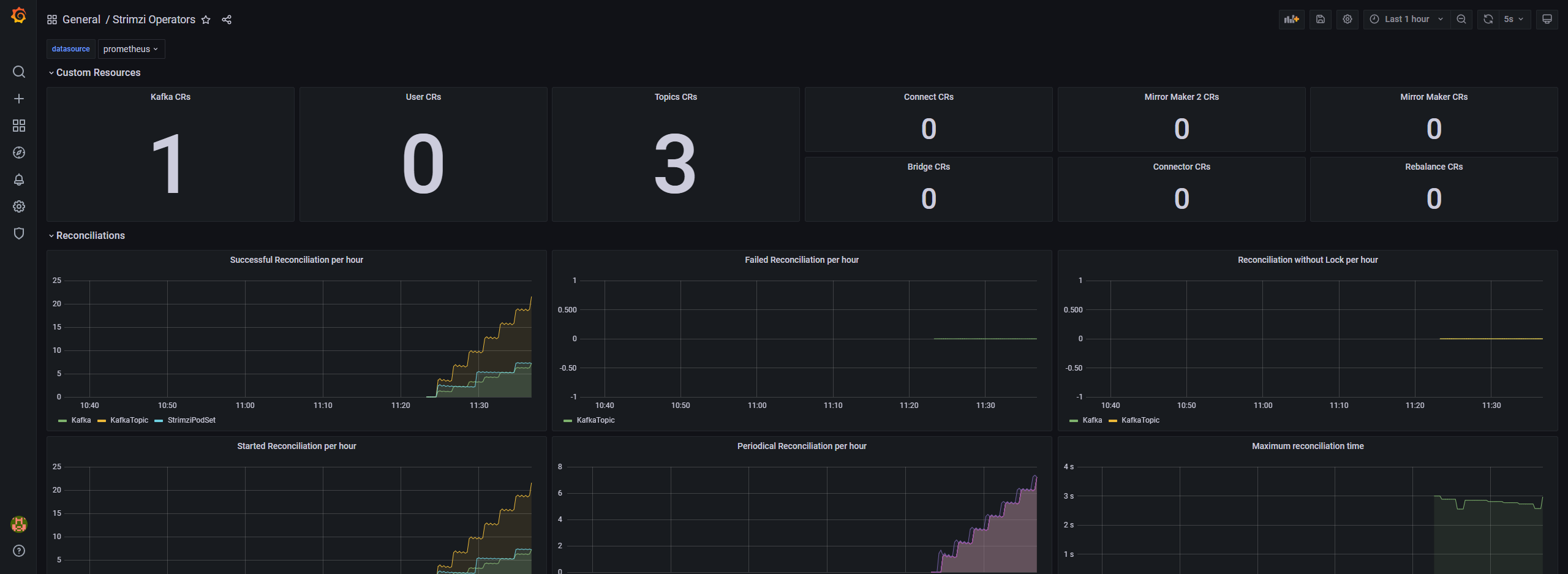

podmonitor.monitoring.coreos.com/kafka-resources-metrics createdgrafana面板

For ready-made dashboards, see: https://github.com/strimzi/strimzi-kafka-operator/tree/main/examples/metrics/grafana-dashboards
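These dashboards query metric names generated by the JMX exporter rules in the `kafka-metrics` ConfigMap. The Python model below shows how a rule turns an MBean attribute into such a name. It assumes the exporter renders each attribute as `domain<bean properties><keys>attribute: value` and matches rule patterns unanchored. Rules are tried in order and the first match wins. `$N` groups are substituted into `name` and `labels`, and the name is then lowercased (`lowercaseOutputName: true`). Name sanitizing, values and metric types are not modeled. Only three of the rules are copied here, and the sample MBean lines are hypothetical.

```python
import re

# A few rules copied from kafka-metrics-config.yml, in their original order:
# (pattern, name template, label templates)
RULES = [
    (r"kafka.(\w+)<type=(.+), name=(.+)PerSec\w*, (.+)=(.+)><>Count",
     "kafka_$1_$2_$3_total", {"$4": "$5"}),
    (r"kafka.(\w+)<type=(.+), name=(.+)PerSec\w*><>Count",
     "kafka_$1_$2_$3_total", {}),
    (r"kafka.(\w+)<type=(.+), name=(.+)><>Value",
     "kafka_$1_$2_$3", {}),
]


def _subst(match, template: str) -> str:
    # Replace $N with capture group N of the rule pattern.
    return re.sub(r"\$(\d+)", lambda g: match.group(int(g.group(1))) or "", template)


def to_metric(attribute_line: str, rules=RULES, lowercase_output_name: bool = True):
    """Return (metric_name, labels) for the first matching rule, or None."""
    for pattern, name_tmpl, label_tmpls in rules:
        m = re.search(pattern, attribute_line)  # patterns are not anchored
        if m is None:
            continue  # try the next rule; the first match wins
        name = _subst(m, name_tmpl)
        if lowercase_output_name:
            name = name.lower()
        labels = {_subst(m, k): _subst(m, v) for k, v in label_tmpls.items()}
        return name, labels
    return None


if __name__ == "__main__":
    for line in (
        "kafka.server<type=BrokerTopicMetrics, name=MessagesInPerSec><>Count: 1024",
        "kafka.server<type=BrokerTopicMetrics, name=MessagesInPerSec, topic=kafka_perf><>Count: 512",
        "kafka.server<type=ReplicaManager, name=UnderReplicatedPartitions><>Value: 0",
    ):
        print(to_metric(line))
```

Rule order matters. The topic-labelled counter is caught by the first, more specific rule, which keeps the `topic` label. The broker-wide counter falls through to the second rule.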

Testing Kafka

Creating a topic

# Create the topic

kubectl -n kafka run kafka-perf -ti --image=quay.io/strimzi/kafka:0.35.1-kafka-3.4.0 --rm=true --restart=Never -- bin/kafka-topics.sh --bootstrap-server my-cluster-kafka-bootstrap:9092 --topic kafka_perf --create --partitions 6 --replication-factor 3

Testing message production
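The producer test that follows writes records of 128 bytes (`--record-size 128`) with `acks=all` and no throughput cap (`--throughput -1`). The MB/sec column it prints is records/sec × record size ÷ (1024 × 1024). The reported numbers are consistent with 1 MB = 1024 × 1024 bytes. A few lines of Python check this arithmetic against the output:

```python
RECORD_SIZE = 128  # bytes per record (--record-size 128)


def mb_per_sec(records_per_sec: float, record_size: int = RECORD_SIZE) -> float:
    """records/sec -> 'MB/sec' as printed by the perf tool (1 MB = 1024 * 1024 bytes)."""
    return records_per_sec * record_size / (1024 * 1024)


if __name__ == "__main__":
    # records/sec values copied from the test output in this post
    for rate in (140420.2, 265122.4, 341235.0, 308947.0, 343226.0, 315887.6):
        print(f"{rate:>10.1f} records/sec -> {mb_per_sec(rate):.2f} MB/sec")
```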

# Producer throughput test

[root@master-0 ~]# kubectl -n kafka run kafka-perf -ti --image=quay.io/strimzi/kafka:0.35.1-kafka-3.4.0 --rm=true --restart=Never -- bin/kafka-producer-perf-test.sh --record-size 128 --print-metrics --producer-props acks=all bootstrap.servers=my-cluster-kafka-bootstrap:9092 --topic kafka_perf --num-records 50000000 --throughput -1

If you don't see a command prompt, try pressing enter.

702101 records sent, 140420.2 records/sec (17.14 MB/sec), 1037.7 ms avg latency, 1498.0 ms max latency.

1325612 records sent, 265122.4 records/sec (32.36 MB/sec), 944.0 ms avg latency, 1318.0 ms max latency.

1706516 records sent, 341235.0 records/sec (41.65 MB/sec), 481.1 ms avg latency, 831.0 ms max latency.

1545044 records sent, 308947.0 records/sec (37.71 MB/sec), 221.1 ms avg latency, 645.0 ms max latency.

1716130 records sent, 343226.0 records/sec (41.90 MB/sec), 207.1 ms avg latency, 588.0 ms max latency.

1579438 records sent, 315887.6 records/sec (38.56 MB/sec), 24.9 ms avg latency, 117.0 ms max latency.

External access to Kafka

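If the Kafka cluster must be reachable from outside Kubernetes, you have to specify the addresses each broker advertises to clients when you create the cluster. Here this is done with `advertisedHost` on a nodeport listener.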
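Reaching the bootstrap port alone is not enough. After bootstrapping, a client connects to each broker at its advertised host and that broker's own NodePort, so every one of those addresses must be reachable from the client machine. In the listener below, only the bootstrap NodePort is pinned. The per-broker NodePorts are assigned by Kubernetes and can be looked up with `kubectl get svc -n kafka`. The following is a minimal Python sketch that checks plain TCP reachability of such host/port pairs. It does not speak the Kafka protocol, and the script name `check_ports.py` is hypothetical.

```python
import socket
import sys


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure or timeout
        return False


def main(argv) -> int:
    """Check every HOST PORT pair given on the command line; return an exit code."""
    if len(argv) < 2 or len(argv) % 2:
        print("usage: check_ports.py HOST PORT [HOST PORT ...]")
        return 2
    all_ok = True
    for host, port in zip(argv[0::2], argv[1::2]):
        ok = can_connect(host, int(port))
        all_ok = all_ok and ok
        print(f"{host}:{port} {'reachable' if ok else 'NOT reachable'}")
    return 0 if all_ok else 1


if __name__ == "__main__":
    main(sys.argv[1:])
```

For example, `python check_ports.py <public-ip> 32095 <public-ip> <broker-nodeport>` checks the bootstrap port and one broker port from outside the cluster.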

# ...
listeners:
  - name: plain
    port: 9092
    type: internal
    tls: false
  - name: tls
    port: 9093
    type: internal
    tls: true
  - name: external
    port: 9094
    type: nodeport
    tls: false
    configuration:
      bootstrap:
        nodePort: 32094
  - name: pubexternal # for access over the public network
    port: 9095
    type: nodeport
    tls: false
    configuration:
      bootstrap:
        nodePort: 32095 # NodePort of the bootstrap service
      brokers:
        - broker: 0
          advertisedHost: 8.56.23.0 # public IP
        - broker: 1
          advertisedHost: 8.32.5.45 # public IP
        - broker: 2
          advertisedHost: 84.54.156.4 # public IP
# ...

Creating a topic

Use the Topic Operator to create a topic:

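apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaTopic
metadata:
  name: test-topic # topic name
  namespace: kafka
  labels:
    strimzi.io/cluster: "my-cluster" # cluster name
spec:
  partitions: 3 # number of partitions
  replicas: 1 # number of replicas

Note: once the topic exists, its partition count can only be increased, never decreased, and its replica count cannot be changed.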
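Adding partitions is allowed, but for keyed records it changes which partition new messages for a key land in. Kafka's Java producer picks the partition for a keyed record from a murmur2 hash of the serialized key: the positive hash modulo the partition count. The Python sketch below is modeled on that scheme and shows the effect of going from 3 to 6 partitions. Treat it as an illustration, not a byte-for-byte reference. Other client libraries may use a different hash, and the keys are hypothetical.

```python
def murmur2(data: bytes) -> int:
    """32-bit murmur2 modeled on Kafka's Java client, returned as an unsigned int."""
    mask = 0xFFFFFFFF
    seed, m, r = 0x9747B28C, 0x5BD1E995, 24
    length = len(data)
    h = (seed ^ length) & mask
    # Process the input four bytes at a time (little-endian words).
    for i in range(0, length - length % 4, 4):
        k = int.from_bytes(data[i:i + 4], "little")
        k = (k * m) & mask
        k ^= k >> r
        k = (k * m) & mask
        h = (h * m) & mask
        h ^= k
    # Mix in the remaining 1-3 bytes (mirrors the Java switch fall-through).
    tail = length % 4
    base = length - tail
    if tail == 3:
        h ^= data[base + 2] << 16
    if tail >= 2:
        h ^= data[base + 1] << 8
    if tail >= 1:
        h ^= data[base]
        h = (h * m) & mask
    h ^= h >> 13
    h = (h * m) & mask
    h ^= h >> 15
    return h


def partition_for_key(key: str, num_partitions: int) -> int:
    # Clear the sign bit (Kafka's toPositive), then take the modulo.
    return (murmur2(key.encode("utf-8")) & 0x7FFFFFFF) % num_partitions


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(1000)]  # hypothetical record keys
    moved = sum(partition_for_key(k, 3) != partition_for_key(k, 6) for k in keys)
    print(f"{moved} of {len(keys)} keys map to a different partition after 3 -> 6")
```

Because 6 is a multiple of 3, each key either stays in its old partition or moves to old + 3 (x mod 6 mod 3 = x mod 3). In general, any change in partition count reshuffles keys, so per-key ordering is only guaranteed within each side of the change.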

Creating a user

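The User Operator can be used to create Kafka users. The ACLs and TLS authentication in this example only take effect if the Kafka resource enables them: `authorization` with `type: simple` under `spec.kafka`, and a listener with TLS client authentication. The cluster defined earlier in this post configures neither.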
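Each ACL in a KafkaUser ties an operation to a resource, described by its type, name and `patternType`. The simplified Python model below shows how such a list is evaluated. It assumes allow-only rules and `literal`/`prefix` pattern types. It ignores deny rules, host matching and Kafka's operation implications. All names are hypothetical apart from the ACLs restated from the `my-user` example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    resource_type: str  # "topic" or "group", as in the KafkaUser spec
    name: str
    pattern_type: str   # "literal" or "prefix"
    operation: str      # e.g. "Read", "Write", "Describe", "Create"


def _resource_matches(acl: Acl, resource_type: str, name: str) -> bool:
    if acl.resource_type != resource_type:
        return False
    if acl.pattern_type == "literal":
        return acl.name == "*" or acl.name == name
    if acl.pattern_type == "prefix":
        return name.startswith(acl.name)
    raise ValueError(f"unsupported patternType {acl.pattern_type!r}")


def is_allowed(acls, resource_type: str, name: str, operation: str) -> bool:
    """Allow-only evaluation: permitted if any ACL matches resource and operation."""
    return any(
        acl.operation == operation and _resource_matches(acl, resource_type, name)
        for acl in acls
    )


# The ACLs of the my-user example, restated as data.
MY_USER_ACLS = [
    Acl("topic", "my-topic", "literal", "Read"),
    Acl("topic", "my-topic", "literal", "Describe"),
    Acl("group", "my-group", "literal", "Read"),
    Acl("topic", "my-topic", "literal", "Write"),
    Acl("topic", "my-topic", "literal", "Create"),
    Acl("topic", "my-topic", "literal", "Describe"),
]

if __name__ == "__main__":
    # A consumer in my-group needs Read on both the topic and the group.
    consume_ok = (is_allowed(MY_USER_ACLS, "topic", "my-topic", "Read")
                  and is_allowed(MY_USER_ACLS, "group", "my-group", "Read"))
    print("consume my-topic as my-group:", consume_ok)
    print("write other-topic:", is_allowed(MY_USER_ACLS, "topic", "other-topic", "Write"))
```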

apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaUser
metadata:
  name: my-user
  labels:
    strimzi.io/cluster: my-cluster
spec:
  authentication:
    type: tls
  authorization:
    type: simple
    acls:
      # Example consumer Acls for topic my-topic using consumer group my-group
      - resource:
          type: topic
          name: my-topic
          patternType: literal
        operation: Read
        host: "*"
      - resource:
          type: topic
          name: my-topic
          patternType: literal
        operation: Describe
        host: "*"
      - resource:
          type: group
          name: my-group
          patternType: literal
        operation: Read
        host: "*"
      # Example Producer Acls for topic my-topic
      - resource:
          type: topic
          name: my-topic
          patternType: literal
        operation: Write
        host: "*"
      - resource:
          type: topic
          name: my-topic
          patternType: literal
        operation: Create
        host: "*"
      - resource:
          type: topic
          name: my-topic
          patternType: literal
        operation: Describe
        host: "*"

Kafka broker configuration
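The broker settings that follow keep data for `log.retention.hours: 168` (7 days) in segments of `log.segment.bytes: 1073741824` (1 GiB). Retention deletes whole closed segments, and the active segment of each partition replica is never deleted. A rough per-broker disk estimate is therefore: write rate × retention × replication factor ÷ brokers, plus about one segment per partition replica. The back-of-the-envelope Python sketch below applies that formula. It assumes partitions spread evenly, no compression and a hypothetical input rate, and is useful for sizing against the 30Gi local-path volumes used earlier.

```python
GIB = 1024 ** 3


def retained_bytes_per_broker(write_bytes_per_sec: float, retention_hours: float,
                              replication_factor: int, brokers: int,
                              partitions: int, segment_bytes: int) -> float:
    """Rough steady-state disk use per broker (sketch; assumes an even spread)."""
    retained = (write_bytes_per_sec * retention_hours * 3600
                * replication_factor / brokers)
    # Each partition replica also keeps an active segment that retention never deletes.
    replicas_per_broker = partitions * replication_factor / brokers
    return retained + replicas_per_broker * segment_bytes


if __name__ == "__main__":
    # Hypothetical load: 1 MiB/s into a 6-partition, RF=3 topic on 3 brokers.
    est = retained_bytes_per_broker(1024 ** 2, 168, 3, 3, 6, 1073741824)
    print(f"~{est / GIB:.1f} GiB per broker vs. a 30 GiB volume")
```

If the result exceeds the volume size, lower `log.retention.hours` or add a size-based limit such as `log.retention.bytes`, which applies per partition.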

apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    config:
      num.partitions: 1
      num.recovery.threads.per.data.dir: 1
      default.replication.factor: 3
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 1
      log.retention.hours: 168
      log.segment.bytes: 1073741824
      log.retention.check.interval.ms: 300000
      num.network.threads: 3
      num.io.threads: 8
      socket.send.buffer.bytes: 102400
      socket.receive.buffer.bytes: 102400
      socket.request.max.bytes: 104857600
      group.initial.rebalance.delay.ms: 0
      zookeeper.connection.timeout.ms: 6000
    # ...

Kafka JVM options

# ...
jvmOptions:
  "-Xmx": "2g"
  "-Xms": "2g"
  "-XX":
    "UseG1GC": true
    "MaxGCPauseMillis": 20
    "InitiatingHeapOccupancyPercent": 35
    "ExplicitGCInvokesConcurrent": true
  gcLoggingEnabled: true
