
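A few days ago I was analyzing short reviews of a film. The data had already been imported into Elasticsearch, so it was a good opportunity to analyze it there and finally generate a word cloud in Kibana.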

First, check the current document count:

```
GET /_cat/indices/scrapy_douban_movie_comments?v

# Output
health status index                        uuid                   pri rep docs.count docs.deleted store.size pri.store.size
green  open   scrapy_douban_movie_comments SdX9pi7BQjKgRj1tcf91XQ   3   2      12185         4828     24.8mb          8.2mb
```

Next, look at the index mapping:

```
GET scrapy_douban_movie_comments

# Output
{
  "scrapy_douban_movie_comments" : {
    "aliases" : { },
    "mappings" : {
      "properties" : {
        "comments" : {
          "type" : "text",
          "analyzer" : "ik_max_word"
        },
        "createtime" : {
          "type" : "date"
        },
        "date" : {
          "type" : "date"
        },
        "title" : {
          "type" : "text",
          "analyzer" : "ik_max_word"
        },
        "updatetime" : {
          "type" : "date"
        },
        "url" : {
          "type" : "text"
        },
        "user" : {
          "type" : "keyword"
        },
        "vote" : {
          "type" : "integer"
        }
      }
    },
    "settings" : {
      "index" : {
        "creation_date" : "1671077371923",
        "number_of_shards" : "3",
        "number_of_replicas" : "2",
        "uuid" : "SdX9pi7BQjKgRj1tcf91XQ",
        "version" : {
          "created" : "7070099"
        },
        "provided_name" : "scrapy_douban_movie_comments"
      }
    }
  }
}
```

The `comments` field above holds the user reviews, and I have already analyzed it with the IK analyzer (`ik_max_word`). However, it cannot be selected in a Kibana tag cloud (word cloud) visualization: it is a `text` field, and `text` fields do not support aggregations or sorting by default. Running a terms aggregation on it in Dev Tools returns an error:

```
GET scrapy_douban_movie_comments/_search
{
  "size": 0,
  "aggs": {
    "comments_terms": {
      "terms": {
        "field": "comments",
        "size": 50
      }
    }
  }
}

# Output
{
  "error" : {
    "root_cause" : [
      {
        "type" : "illegal_argument_exception",
        "reason" : "Text fields are not optimised for operations that require per-document field data like aggregations and sorting, so these operations are disabled by default. Please use a keyword field instead. Alternatively, set fielddata=true on [comments] in order to load field data by uninverting the inverted index. Note that this can use significant memory."
      }
    ],
    "type" : "search_phase_execution_exception",
    "reason" : "all shards failed",
    "phase" : "query",
    "grouped" : true,
    "failed_shards" : [
      {
        "shard" : 0,
        "index" : "scrapy_douban_movie_comments",
        "node" : "Vgd49fa4Qrir7oHXUGW_zw",
        "reason" : {
          "type" : "illegal_argument_exception",
          "reason" : "Text fields are not optimised for operations that require per-document field data like aggregations and sorting, so these operations are disabled by default. Please use a keyword field instead. Alternatively, set fielddata=true on [comments] in order to load field data by uninverting the inverted index. Note that this can use significant memory."
        }
      }
    ],
    "caused_by" : {
      "type" : "illegal_argument_exception",
      "reason" : "Text fields are not optimised for operations that require per-document field data like aggregations and sorting, so these operations are disabled by default. Please use a keyword field instead. Alternatively, set fielddata=true on [comments] in order to load field data by uninverting the inverted index. Note that this can use significant memory.",
      "caused_by" : {
        "type" : "illegal_argument_exception",
        "reason" : "Text fields are not optimised for operations that require per-document field data like aggregations and sorting, so these operations are disabled by default. Please use a keyword field instead. Alternatively, set fielddata=true on [comments] in order to load field data by uninverting the inverted index. Note that this can use significant memory."
      }
    }
  },
  "status" : 400
}
```

For `text` fields, `fielddata` is `false` by default. We can set the field's `fielddata` property to `true`; once it is enabled, the field supports aggregations.
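The error message describes what `fielddata=true` does: it "uninverts" the inverted index, turning term → documents into document → terms, and keeps that per-document view in memory so a terms aggregation can count terms. Below is a minimal Python sketch of that idea, not Elasticsearch's actual implementation; the documents and tokens are made-up examples and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def build_inverted_index(docs):
    """Map each token to the set of doc ids containing it (the structure used for search)."""
    inverted = defaultdict(set)
    for doc_id, tokens in docs.items():
        for token in tokens:
            inverted[token].add(doc_id)
    return inverted


def uninvert(inverted):
    """Rebuild doc id -> terms, the per-document view that aggregations need."""
    per_doc = defaultdict(list)
    for term in sorted(inverted):
        for doc_id in inverted[term]:
            per_doc[doc_id].append(term)
    return per_doc


def terms_agg(per_doc, size):
    """Count documents per term and return the top `size` (term, doc_count) pairs."""
    counts = Counter()
    for terms in per_doc.values():
        counts.update(set(terms))  # a document counts at most once per term
    # Highest doc_count first; ties broken by term so the order is deterministic.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return ranked[:size]


if __name__ == "__main__":
    # Made-up, already-tokenized reviews (doc id -> tokens).
    docs = {
        1: ["莫名其妙", "剧情"],
        2: ["莫名其妙", "中规中矩"],
        3: ["忍者神龟", "中规中矩", "莫名其妙"],
    }
    per_doc = uninvert(build_inverted_index(docs))
    print(terms_agg(per_doc, 2))  # [('莫名其妙', 3), ('中规中矩', 2)]
```

Every (document, term) pair ends up in memory in this model, which is why the number of tokens a field produces directly drives how much heap its fielddata needs.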

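We also want to customize how `comments` is analyzed. With `ik_max_word`, the output contains single-character tokens, which I don't want, and many of the two-character tokens are useless as well. So I decided to keep only tokens that are four or more characters long.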
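Keeping only tokens of four or more characters is what Elasticsearch's `length` token filter does with `min: 4`: tokens shorter than `min` or longer than `max` are dropped. A minimal Python sketch of that filtering step; the token list is a made-up stand-in for analyzer output, not real IK results.

```python
def length_filter(tokens, min_len=4, max_len=None):
    """Drop tokens shorter than min_len or longer than max_len (None = no upper bound).

    Python's len() counts code points, while Lucene counts UTF-16 code units;
    the two agree for common Chinese characters, which are all in the BMP.
    """
    return [
        token
        for token in tokens
        if len(token) >= min_len and (max_len is None or len(token) <= max_len)
    ]


if __name__ == "__main__":
    # Hypothetical tokens for one review; not actual IK analyzer output.
    tokens = ["这部", "电影", "莫名其妙", "的", "忍者神龟", "imax", "3d"]
    print(length_filter(tokens))  # ['莫名其妙', '忍者神龟', 'imax']
```

The filter counts characters regardless of script, so four-letter Latin tokens such as `imax` also survive, which matches the `imax` and `analog` buckets in the aggregation results of this post.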

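There is another reason: with `fielddata` enabled, the field data is loaded into heap memory only after an aggregation first runs on the field, not when the index is created. To keep heap usage down, we should avoid producing useless tokens in the first place.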
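To make both points concrete (nothing is built at index time, and in-memory size grows with the number of tokens), here is a toy Python model. The class `LazyFielddata` and its `entries()` count are hypothetical stand-ins; real heap usage is measured in bytes and depends on Elasticsearch's internal data structures.

```python
class LazyFielddata:
    """Toy model: per-document terms are built on the first aggregation, then kept."""

    def __init__(self, docs):
        self._docs = docs  # doc id -> token list, standing in for the on-disk index
        self._per_doc = None  # nothing is held in memory until the first aggregation

    @property
    def loaded(self):
        return self._per_doc is not None

    def entries(self):
        """Rough memory proxy: number of (doc, term) pairs currently held in memory."""
        if self._per_doc is None:
            return 0
        return sum(len(terms) for terms in self._per_doc.values())

    def aggregate(self):
        """Count documents per term, loading the per-document view on first use."""
        if self._per_doc is None:
            self._per_doc = {doc: sorted(set(toks)) for doc, toks in self._docs.items()}
        counts = {}
        for terms in self._per_doc.values():
            for term in terms:
                counts[term] = counts.get(term, 0) + 1
        return counts


if __name__ == "__main__":
    # Made-up tokens: unfiltered output vs. the same reviews after dropping short tokens.
    unfiltered = LazyFielddata({1: ["这部", "电影", "莫名其妙"], 2: ["电影", "中规中矩", "的"]})
    filtered = LazyFielddata({1: ["莫名其妙"], 2: ["中规中矩"]})
    print(unfiltered.loaded, unfiltered.entries())  # False 0
    unfiltered.aggregate()
    filtered.aggregate()
    print(unfiltered.entries(), filtered.entries())  # 6 2
```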

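Note: in production, pay close attention to indices with `text` fields that have `fielddata` set to `true`, so that they don't consume too much heap and cause an OOM.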
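The main guard against such an OOM is the fielddata circuit breaker, controlled by `indices.breaker.fielddata.limit` (40% of the heap by default) and `indices.breaker.fielddata.overhead` (1.03 by default). As fielddata is loaded, its estimated size is added to what the breaker already tracks, multiplied by the overhead factor, and compared with the limit; if the limit would be exceeded, the request fails instead of exhausting the heap. A simplified Python model of that check, assuming a 1 GiB example heap and ignoring the parent breaker and other details of the real implementation:

```python
def parse_percent(value):
    """Turn a percentage setting such as "40%" into a fraction (0.4)."""
    return float(value.strip().rstrip("%")) / 100


def fielddata_breaker_trips(heap_bytes, used_bytes, new_bytes, limit="40%", overhead=1.03):
    """Simplified fielddata breaker check: would adding new_bytes exceed the limit?

    used_bytes is what the breaker has already accounted for; the new total is
    scaled by the overhead factor before being compared with limit * heap.
    """
    limit_bytes = heap_bytes * parse_percent(limit)
    return (used_bytes + new_bytes) * overhead > limit_bytes


if __name__ == "__main__":
    heap = 1024 ** 3  # example value: a 1 GiB heap
    print(fielddata_breaker_trips(heap, 0, 400 * 1024 ** 2))  # True: 400 MiB * 1.03 > 40% of 1 GiB
    print(fielddata_breaker_trips(heap, 0, 300 * 1024 ** 2))  # False
```

Raising the limit (as in the `41%` example in this post) only moves the threshold; it does not reduce how much fielddata is loaded, which is why shrinking the token set matters.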

The cluster should also put some limits on fielddata:

```
GET _cluster/settings?include_defaults&flat_settings

# Output
... (omitted)
"indices.breaker.fielddata.limit" : "40%",
"indices.breaker.fielddata.overhead" : "1.03",
"indices.breaker.fielddata.type" : "memory",
... (omitted)
"indices.fielddata.cache.size" : "-1b",
... (omitted)
```

`indices.fielddata.cache.size`

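Controls how much heap is allocated to fielddata. By default it is unbounded (the `-1b` above), so Elasticsearch never evicts data from fielddata: the fielddata of old indices stays in the cache forever. It keeps growing until the fielddata circuit breaker trips, after which no more fielddata can be loaded. So we should change the default. This setting cannot be updated dynamically; set an upper bound for fielddata by adding it to config/elasticsearch.yml: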

```
indices.fielddata.cache.size: 20%
```

`indices.breaker.fielddata.limit`

By default the fielddata circuit breaker caps fielddata at 40% of the heap. The limit can be set in config/elasticsearch.yml, or updated dynamically on a running cluster:

```
PUT /_cluster/settings
{
  "persistent" : {
    "indices.breaker.fielddata.limit" : "41%"
  }
}
```

1. Create a new index and set the mapping

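Here we define a custom analyzer, `ik_smart_ext`, that uses the `ik_smart` tokenizer plus a `length` token filter which keeps only tokens of four or more characters: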

```
PUT scrapy_douban_movie_comments_v4
{
  "settings": {
    "analysis": {
      "analyzer": {
        "ik_smart_ext": {
          "tokenizer": "ik_smart",
          "filter": [
            "bigger_than_4"
          ]
        }
      },
      "filter": {
        "bigger_than_4": {
          "type": "length",
          "min": 4
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "comments": {
        "type": "text",
        "analyzer": "ik_smart_ext",
        "fielddata": true
      },
      "createtime": {
        "type": "date"
      },
      "date": {
        "type": "date"
      },
      "title": {
        "type": "text",
        "analyzer": "ik_max_word"
      },
      "updatetime": {
        "type": "date"
      },
      "url": {
        "type": "text"
      },
      "user": {
        "type": "keyword"
      },
      "vote": {
        "type": "integer"
      }
    }
  }
}
```

2. Reindex

The data volume is small and this is only a test, so there is no need to set `size`, `slices`, or other options:

```
POST _reindex
{
  "source": {
    "index": "scrapy_douban_movie_comments"
  },
  "dest": {
    "index": "scrapy_douban_movie_comments_v4"
  }
}
```

Once the reindex finishes, we can test an aggregation on the field:

```
GET scrapy_douban_movie_comments_v4/_search
{
  "size": 0,
  "aggs": {
    "comments_terms": {
      "terms": {
        "field": "comments",
        "size": 5
      }
    }
  }
}

# Output
{
  "took" : 6,
  "timed_out" : false,
  "_shards" : {
    "total" : 1,
    "successful" : 1,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : {
      "value" : 10000,
      "relation" : "gte"
    },
    "max_score" : null,
    "hits" : [ ]
  },
  "aggregations" : {
    "comments_terms" : {
      "doc_count_error_upper_bound" : 0,
      "sum_other_doc_count" : 10611,
      "buckets" : [
        {
          "key" : "莫名其妙",
          "doc_count" : 84
        },
        {
          "key" : "imax",
          "doc_count" : 64
        },
        {
          "key" : "中规中矩",
          "doc_count" : 64
        },
        {
          "key" : "忍者神龟",
          "doc_count" : 64
        },
        {
          "key" : "analog",
          "doc_count" : 57
        }
      ]
    }
  }
}
```

Check how much memory fielddata is using for this index:

{

"_shards" : {

"total" : 2,

"successful" : 2,

"failed" : 0

},

"_all" : {

"primaries" : {

"fielddata" : {

"memory_size" : "142.8kb",

"memory_size_in_bytes" : 146232,

"evictions" : 0

}

},

"total" : {

"fielddata" : {

"memory_size" : "321.6kb",

"memory_size_in_bytes" : 329336,

"evictions" : 0

}

}

},

"indices" : {

"scrapy_douban_movie_comments_v4" : {

"uuid" : "aW1mmy3WS5SZfg5XVQr-Cw",

"primaries" : {

"fielddata" : {

"memory_size" : "142.8kb",

"memory_size_in_bytes" : 146232,

"evictions" : 0

}

},

"total" : {

"fielddata" : {

"memory_size" : "321.6kb",

"memory_size_in_bytes" : 329336,

"evictions" : 0

}

}

}

}

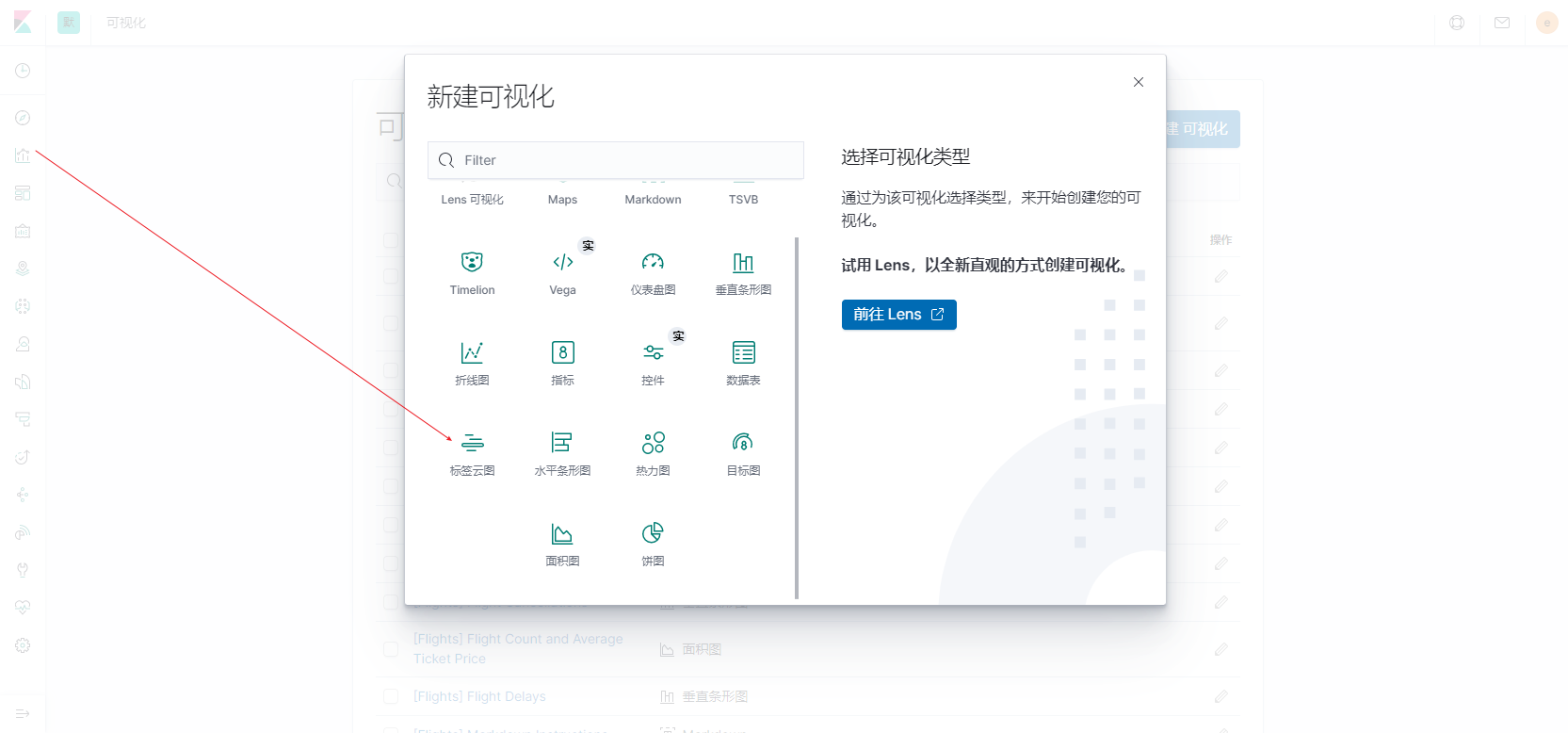

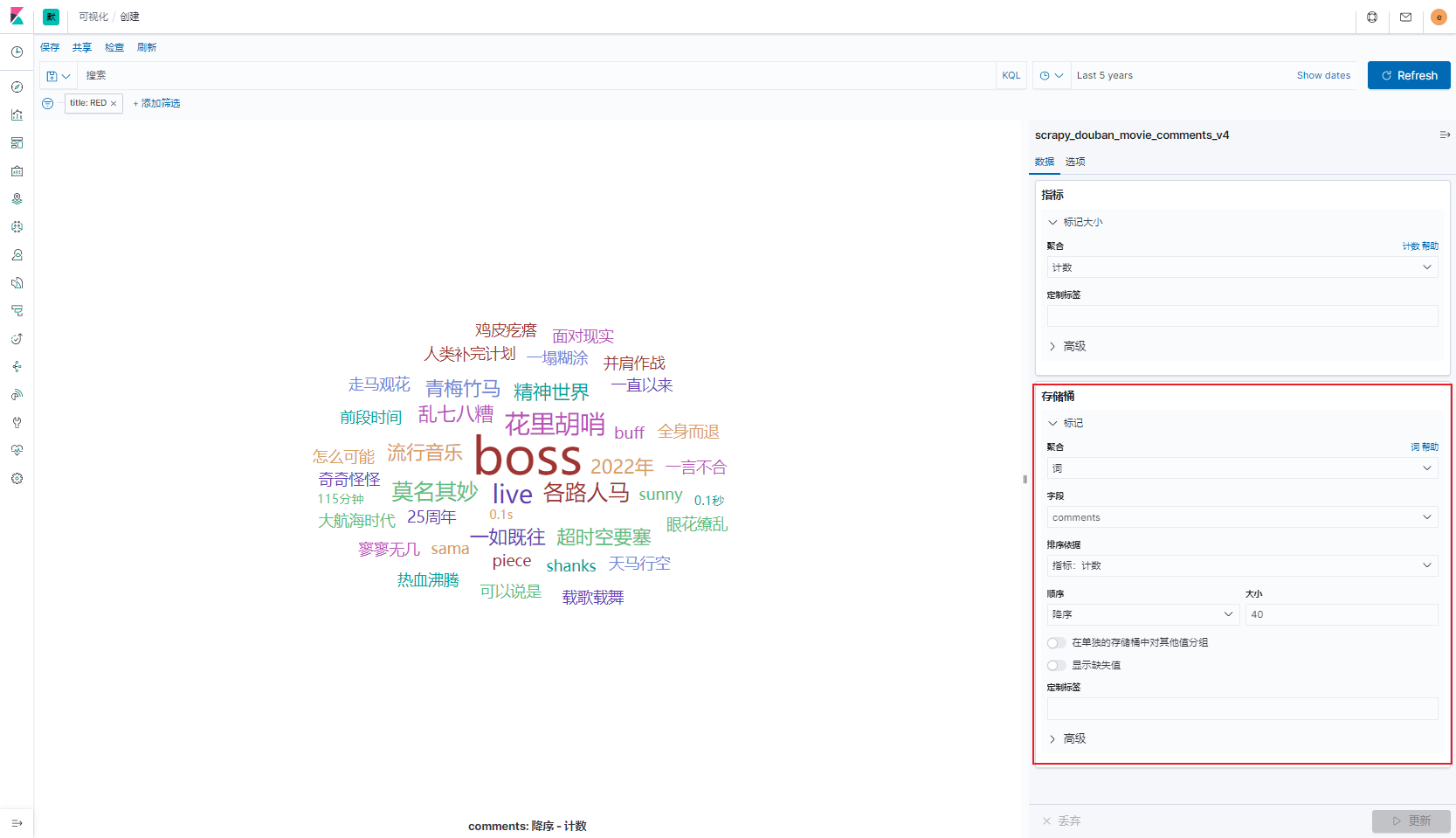

}3.kibana中创建词云标签图
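Kibana's tag cloud is driven by a terms aggregation like the one tested above, and it sizes each word by its count. The Python sketch below shows one way such sizes could be derived from the aggregation response, using the five buckets returned earlier and a linear scale between a hypothetical minimum and maximum font size (18 and 72 here). It illustrates the idea and is not Kibana's rendering code; the helper names are hypothetical.

```python
def buckets(response, agg_name="comments_terms"):
    """Extract (key, doc_count) pairs from a terms aggregation response."""
    return [(b["key"], b["doc_count"]) for b in response["aggregations"][agg_name]["buckets"]]


def linear_font_sizes(pairs, min_size=18, max_size=72):
    """Map each term's count linearly onto [min_size, max_size] (hypothetical sizes)."""
    if not pairs:
        return {}
    counts = [count for _, count in pairs]
    low, high = min(counts), max(counts)
    sizes = {}
    for term, count in pairs:
        # If every count is equal, give every term the largest size.
        ratio = 1.0 if high == low else (count - low) / (high - low)
        sizes[term] = round(min_size + ratio * (max_size - min_size))
    return sizes


if __name__ == "__main__":
    # Buckets copied from the aggregation output shown earlier in this post.
    response = {
        "aggregations": {
            "comments_terms": {
                "buckets": [
                    {"key": "莫名其妙", "doc_count": 84},
                    {"key": "imax", "doc_count": 64},
                    {"key": "中规中矩", "doc_count": 64},
                    {"key": "忍者神龟", "doc_count": 64},
                    {"key": "analog", "doc_count": 57},
                ]
            }
        }
    }
    print(linear_font_sizes(buckets(response)))
    # {'莫名其妙': 72, 'imax': 32, '中规中矩': 32, '忍者神龟': 32, 'analog': 18}
```

Kibana's tag cloud options also offer log and square-root scales, which compress the gap between very frequent and rare words compared with the linear mapping sketched here.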
